Introduction

The field of artificial intelligence (AI) has experienced remarkable growth and evolution in recent years, driven by advancements in machine learning techniques, increased computational power, and the availability of vast amounts of data. One of the most significant developments in this domain has been the emergence of large language models (LLMs), which have demonstrated impressive capabilities in natural language processing tasks such as text generation, summarization, and question-answering.

As LLMs and other AI models continue to grow in size and complexity, demand has skyrocketed for powerful hardware accelerators such as NVIDIA's H100, GH200, and B200 GPUs, as well as solutions from companies like Cerebras (WSE-3) and Groq.

This growth in AI capabilities has been accompanied by a surge in investments from governments, venture capitalists, and individual investors, driven by the promise of strong returns on investment (ROI) in this burgeoning field and by the ambition of taking humanity to the next level with AGI. The democratization of AI, which aims to make these advanced technologies accessible to a broader range of users and organizations, has also gained significant traction.

Recent news of substantial investments in AI and GPU cloud services underscores the growing recognition of the importance of these technologies. For example, the Indian government announced an investment in 10,000 GPUs to build a sovereign AI cloud supercomputer. Similarly, investors such as Nat Friedman and Daniel Gross run their own AI cluster, Andromeda.

In this article, we explore what an AI Cloud is, why you might need one, and how to build one.

What is AI Cloud?

AI Cloud refers to a cloud computing infrastructure specifically designed and optimized for the deployment, management, and scaling of AI and machine learning workloads. It combines the power of cloud computing with specialized hardware accelerators, such as GPUs and other AI-focused chips, as well as software tools and frameworks tailored for AI applications. It differs from hyperscalers such as AWS, Google Cloud, or Azure in that it is built for a single purpose: serving AI workloads as well as possible. AI Clouds are meant to be cost-effective, particularly for large distributed training jobs, and they aim to democratize AI by simplifying how it is consumed.

The following are the functionalities one should expect from an AI Cloud:

- Ability to manage data that will be used for training purposes. It includes data collection, ingestion, analysis, and curation.

- Model training and evaluation

- Deployment of model (inferencing)

- Hyperparameter tuning

- Model monitoring

- Security

What is the need for a specialized AI Cloud?

The benefits and use cases of AI Cloud highlight the increasing demand for such specialized cloud services. Here are some key advantages and applications:

Benefits

- Sovereign AI Cloud: AI Cloud can offer enhanced data sovereignty and control, allowing organizations to keep their data and AI operations within their secure cloud environment, adhering to local regulations and privacy requirements.

- Access to cutting-edge models: While open-source AI models are valuable, the most advanced and powerful models are often closed-source and proprietary. AI Cloud providers can offer access to these state-of-the-art models, enabling organizations to leverage their capabilities without the need for extensive in-house development.

- Lack of operational skills: Managing AI infrastructure can be complex and resource-intensive, requiring specialized skills in areas such as GPU optimization, model deployment, and scalability. AI Cloud services can abstract away much of this complexity, enabling organizations to focus on their core AI initiatives without the need for extensive in-house operational expertise.

- Reducing costs and improving security: By leveraging the economies of scale and shared resources of cloud providers, organizations can significantly reduce their AI infrastructure costs while benefiting from enhanced security measures and best practices implemented by cloud providers.

- AI + Cloud Native: AI Cloud services are often designed with cloud-native principles in mind, enabling seamless integration with other cloud services and facilitating the development of scalable, resilient, and distributed AI applications. You can read more about how cloud native technologies accelerate AI here.

- Democratization of AI: By lowering the barriers to entry and providing accessible AI resources, AI Cloud services contribute to the democratization of AI, enabling smaller organizations and individuals to leverage these powerful technologies.

- Supply chain issues and GPU availability: With the increasing demand for GPUs and other specialized hardware, AI Cloud services can help organizations overcome supply chain challenges through innovative GPU scheduling and resource-sharing techniques, ensuring access to the necessary compute resources.

Use Cases

- GPU Cloud for Datacenters: AI Cloud services can help datacenters build and provide innovative solutions for their customers, so that they can maximize their hardware investment while delivering value to end customers. Many traditional datacenters have a plethora of compute, but their software stack has lagged behind. By bringing in cloud native technologies, they can build a stack that competes with the larger cloud players, offering a similar or, in certain categories, much better user experience and pricing.

- Large enterprises building internal AI Cloud Platforms: Many large enterprises are developing their own internal AI Cloud Platforms to address specific use cases and requirements such as security, compliance, and IP protection. Cost is another factor to consider: with AI, cloud costs can skyrocket. By using a cloud native and open source stack, combined with platform engineering, enterprises can give the best experience to developers and internal stakeholders.

- Air-gapped environments: Organizations operating in highly secure or air-gapped environments can benefit from sovereign AI Cloud services by deploying private, on-premises AI clouds, ensuring data and model security while still leveraging the benefits of cloud-based AI infrastructure. This can be strengthened with advanced techniques such as confidential computing, which is supported by the NVIDIA Hopper architecture.

- Virtual machines (VMs) with GPU access for data scientists and ML engineers: This is a very simple use case, but it is the need of the hour for every business, because engineers need access to larger machines and powerful GPUs. Few companies want to fund this out of their CAPEX budget. Most would rather use an AI Cloud service that offers GPU-accelerated VMs tailored for data scientists and machine learning engineers, providing them with the necessary compute resources for model development, training, and experimentation.

- AI Platforms: By abstracting away the complexities of model deployment and management, AI Cloud services can enable organizations to deploy large language models (LLMs) and other AI models with a simple one-click process, streamlining the deployment workflow and reducing operational overhead.

How to build AI Cloud

As we explore the concept of building an AI Cloud, it is important to revisit the synergies between AI and cloud-native technologies. Cloud-native principles, such as containerization, microservices, and automated scaling, are highly relevant to AI workloads, enabling efficient deployment, management, and scalability of AI models and pipelines.

Here we share what we have learned from extensive research and hands-on experience building one.

Infrastructure Layer

The hardware and infrastructure layer is the most critical part of an AI Cloud because of its high compute and bandwidth demands and its reliability requirements. The processing power of CPUs and GPUs, network bandwidth, storage performance, and the power efficiency of the whole system all play an important role in the success of an AI Cloud. For example, as a business, you don’t want a distributed training job running on hundreds of expensive GPUs to fail at the last moment, or to leave those GPUs underutilized; either outcome wastes a massive amount of compute.
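To make the cost of a late failure concrete, here is a rough back-of-the-envelope sketch. The cluster size, the price per GPU-hour, and the checkpoint interval are all hypothetical numbers chosen for illustration, not figures from a real deployment.

```python
def wasted_gpu_cost(num_gpus: int, hours_lost: float, price_per_gpu_hour: float) -> float:
    """Cost of the GPU time that has to be recomputed after a failure."""
    return num_gpus * hours_lost * price_per_gpu_hour


# Hypothetical cluster: 512 GPUs billed at $2.50 per GPU-hour.
# Without checkpoints, a failure at hour 72 loses all 72 hours of work.
no_checkpoint = wasted_gpu_cost(512, hours_lost=72.0, price_per_gpu_hour=2.50)
# With hourly checkpoints, at most one hour of work is lost.
hourly_checkpoint = wasted_gpu_cost(512, hours_lost=1.0, price_per_gpu_hour=2.50)

print(f"failure at hour 72, no checkpoints:     ${no_checkpoint:,.0f}")
print(f"failure at hour 72, hourly checkpoints: at most ${hourly_checkpoint:,.0f}")
```

The same arithmetic applies to underutilization: every idle fraction of a GPU is billed at the same hourly rate as a busy one.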

Compute & Network: One can build the stack on bare metal servers or virtual machines (VMs), on-premises or in the cloud, although bare metal is more performant. The compute nodes are connected by high-performance networks such as InfiniBand, which supports RDMA, for efficient data transfer and high-speed communication.

GPUs: They are the heart of the AI Cloud and its major differentiator. Essentially, a pool of different GPU types is required, chosen according to their performance and capabilities. In addition, GPU scheduling and optimization strategies are needed, implemented through orchestration layers and drivers. Please note that some features, such as fractional GPUs, may require additional licenses from the vendor.

Storage: High-speed storage is needed for handling large datasets and model artifacts. Both block storage and object storage are essential, backed by technologies such as Ceph and MinIO.

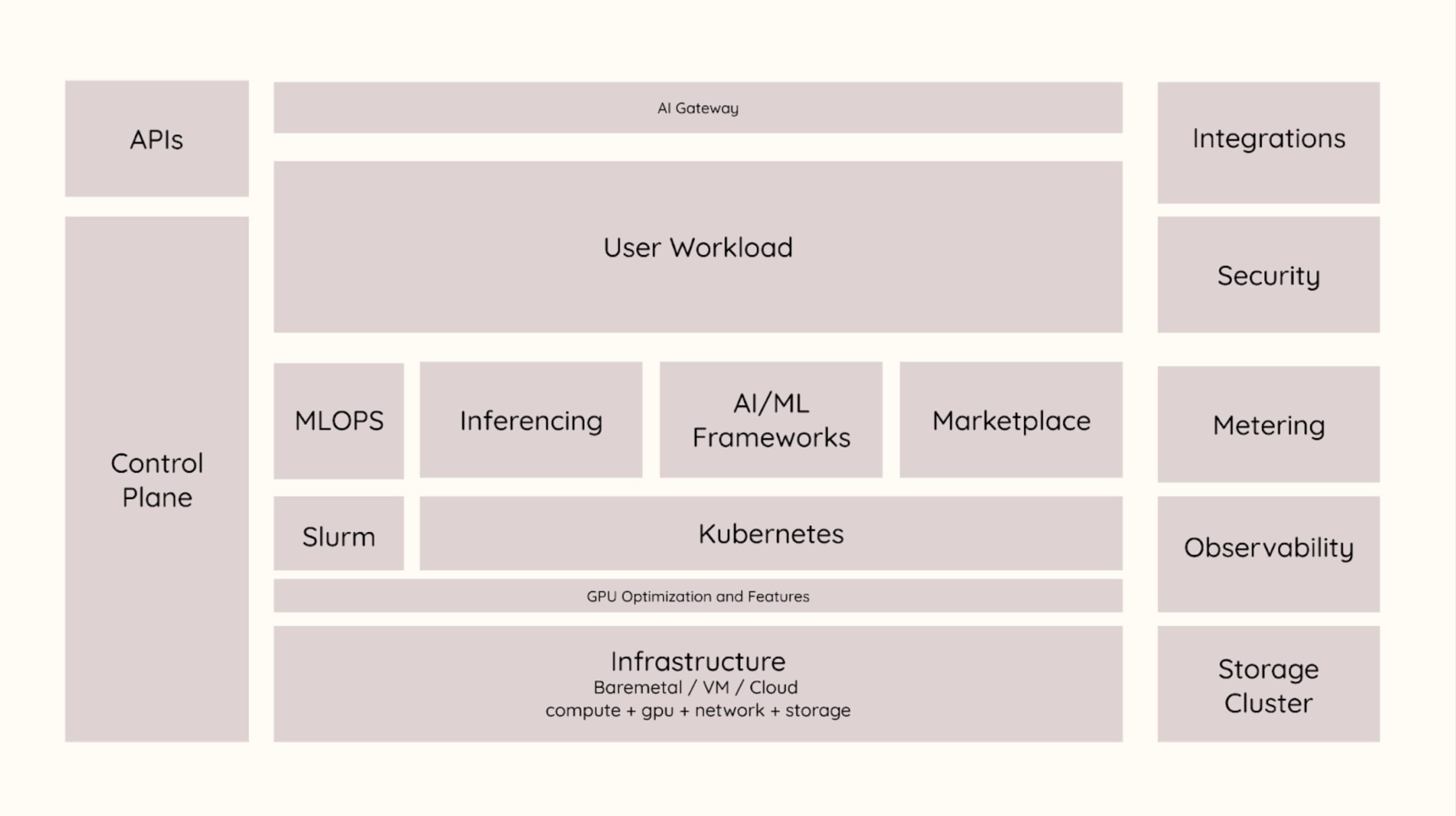

Orchestration Layer

Kubernetes is the de facto standard for orchestration. Its ecosystem is broad and extensible, and it is the most flexible option, so it is the one we suggest. In some cases, particularly in HPC, Slurm is preferred; it is a fault-tolerant and scalable cluster management and job scheduling system.
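As a small illustration of how a workload asks Kubernetes for GPUs, the sketch below builds a Pod manifest in Python and prints it as JSON, which `kubectl apply -f` accepts. It assumes the NVIDIA device plugin is installed in the cluster, since that is what exposes the `nvidia.com/gpu` resource. The Pod name, container name, and image are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a Pod manifest that requests whole GPUs through resource limits."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",  # hypothetical container name
                    "image": image,
                    # GPUs are requested under limits; the scheduler will only
                    # place the Pod on a node with this many free GPUs.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical image reference, used only for illustration.
manifest = gpu_pod_manifest("train-job", "registry.example.com/trainer:latest", gpus=2)
print(json.dumps(manifest, indent=2))
```

In practice a training job would more likely be submitted through a higher-level resource such as a Job or a framework operator, but the GPU request itself looks the same.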

Control Plane:

- Custom control plane for managing and orchestrating cloud workloads and requests.

- Responsible for resource allocation, scheduling, and automation.
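To give a feel for the resource allocation a control plane performs, here is a deliberately simplified first-fit allocator for fractional GPU requests. It is a toy model, not how our control plane or any vendor scheduler works: real GPU sharing (for example MIG partitions or time-slicing) comes with fixed profile sizes and isolation trade-offs that this sketch ignores. All job and GPU names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    gpu_id: str
    free: float = 1.0  # fraction of the device still unallocated
    jobs: list = field(default_factory=list)


def first_fit(gpus, job, fraction):
    """Place the job on the first GPU with enough free capacity.

    Returns the chosen GPU id, or None if the request has to wait.
    """
    for gpu in gpus:
        if gpu.free + 1e-9 >= fraction:  # small tolerance for float error
            gpu.free = round(gpu.free - fraction, 6)
            gpu.jobs.append(job)
            return gpu.gpu_id
    return None


pool = [Gpu("gpu-0"), Gpu("gpu-1")]
requests = [
    ("notebook-a", 0.5),
    ("inference-b", 0.25),
    ("train-c", 1.0),
    ("notebook-d", 0.5),
]
placements = {job: first_fit(pool, job, fraction) for job, fraction in requests}
print(placements)
```

With this pool the first three requests are placed and `notebook-d` gets `None`, because neither GPU has half a device free. A real control plane would queue that request or preempt lower-priority work.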

Building Blocks

Various building blocks form the overall cloud. This is not an exhaustive list, but it covers some of the technologies we have used in our solutions.

- KubeVirt: It brings virtualization to Kubernetes, allowing virtual machines to run alongside containers on the same cluster, so that VM-based AI workloads benefit from the same flexibility and resource utilization.

- Ray or Kubeflow: These frameworks serve as powerful tools for managing distributed AI and ML workloads on Kubernetes, streamlining the orchestration process, and optimizing resource allocation.

- MLflow: Essential for model management and deployment, MLflow offers robust capabilities for tracking experiments, packaging code, and deploying models seamlessly within the AI cloud environment.

- Vector databases: Critical for efficient storage and retrieval of high-dimensional data, vector databases play a pivotal role in supporting AI applications that require complex data structures.

- Inferencing engines: Utilizing cutting-edge solutions like vLLM and Triton, we ensure optimized model serving within the AI cloud, delivering high-performance inferencing capabilities to meet diverse workload requirements.

- Monitoring and logging: Employing observability and troubleshooting tools, we maintain a comprehensive view of AI workloads, enabling proactive monitoring, detection, and resolution of potential issues. See our observability offering.

- Security and audit logging: Prioritizing data protection and compliance, we implement robust security measures and audit logging mechanisms to safeguard sensitive information and ensure regulatory compliance within the AI cloud environment.

- RAG (Retrieval-Augmented Generation): Leveraging advanced techniques like RAG, we enhance the capabilities of large language models, enabling them to generate more contextually relevant and accurate responses.

- Serverless inferencing: Embracing serverless architecture for on-demand and scalable model serving, we optimize resource utilization and ensure cost-effectiveness in delivering inferencing capabilities to users.

- GPU Optimization:

  - Partitioning: By efficiently sharing GPU resources among multiple workloads, we maximize resource utilization and enhance overall system performance.

  - Fractional GPUs: Implementing fractional GPU allocation strategies, we optimize GPU utilization by dynamically allocating fractions of GPU resources based on workload requirements.

- User interface (UI): Providing intuitive web-based or command-line interfaces, we empower users to seamlessly manage and interact with the AI cloud, facilitating ease of use and enhancing productivity.

- Billing or metering: Offering a service without proper metering leaves both the revenue officer and the consumers of the service unhappy. Put solid usage-based metering in place.

- Multi-tenancy: Design a system that enforces hard isolation, with quotas and limits defined for each tenant, to make it more reliable and secure.
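To illustrate the retrieval step that vector databases and RAG in the list above rely on, here is a minimal pure-Python sketch of nearest-neighbor search by cosine similarity. A real vector database adds approximate indexing, persistence, and filtering on top of this idea. The document ids and three-dimensional embeddings are hypothetical; in practice the vectors come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, index, k=2):
    """Return the k document ids whose vectors are most similar to the query."""
    ranked = sorted(index, key=lambda doc_id: cosine(query, index[doc_id]), reverse=True)
    return ranked[:k]


# Hypothetical embeddings keyed by document id.
index = {
    "gpu-partitioning": [0.9, 0.1, 0.0],
    "billing-faq": [0.0, 0.2, 0.9],
    "mig-setup": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]

# In RAG, the retrieved documents are added to the prompt as context.
context_ids = top_k(query, index)
prompt = "Answer using these documents: " + ", ".join(context_ids)
print(prompt)
```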

Our design principle when building a cloud is to minimize friction and enable engineers and other stakeholders to move swiftly in the new era of AI.
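The metering and multi-tenancy items in the list above can be sketched together as a usage meter that enforces per-tenant quotas. This is a minimal in-memory illustration under assumed names and prices. A production system would persist usage events, and a quota on its own does not provide isolation, which still needs boundaries such as namespaces or VMs.

```python
from collections import defaultdict


class Meter:
    """Tracks GPU-hours per tenant and enforces a hard quota (illustrative only)."""

    def __init__(self, quotas, price_per_gpu_hour):
        self.quotas = quotas              # tenant -> allowed GPU-hours
        self.price = price_per_gpu_hour   # hypothetical flat rate
        self.used = defaultdict(float)    # tenant -> consumed GPU-hours

    def record(self, tenant, gpus, hours):
        """Record usage. Returns False and records nothing if the quota would be exceeded."""
        gpu_hours = gpus * hours
        if self.used[tenant] + gpu_hours > self.quotas.get(tenant, 0.0):
            return False
        self.used[tenant] += gpu_hours
        return True

    def invoice(self, tenant):
        """Amount owed by the tenant for the usage recorded so far."""
        return round(self.used[tenant] * self.price, 2)


meter = Meter(quotas={"team-a": 100.0, "team-b": 10.0}, price_per_gpu_hour=2.0)
accepted_a = meter.record("team-a", gpus=8, hours=4)  # 32 GPU-hours, within quota
accepted_b = meter.record("team-b", gpus=8, hours=4)  # 32 GPU-hours, over the quota of 10
print(accepted_a, meter.invoice("team-a"))
print(accepted_b, meter.invoice("team-b"))
```

Rejecting the request before it runs, rather than billing for overage afterwards, is one possible policy; which one fits depends on the commercial model.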

Reference Architecture for AI Cloud

The following reference architecture provides a high-level overview of the key components involved in building an AI Cloud. Please note that this is a simplified representation, and we encourage readers to schedule a meeting with our experts for a more detailed discussion and tailored guidance.

Key Challenges in Building AI Cloud

- Complexity Across Multiple Technologies: Building an AI cloud entails integrating diverse technologies spanning cloud-native solutions, AI/ML frameworks, and infrastructure components. Negotiating this complexity demands a comprehensive understanding of each domain and the ability to orchestrate them harmoniously.

- Depth and Breadth of Skills: Successfully addressing the multifaceted challenges of AI cloud construction requires profound expertise that extends across a wide spectrum of disciplines. From cloud architecture to machine learning algorithms, proficiency in various domains is indispensable for ensuring the seamless operation and performance of the AI cloud.

- Learnings from Past Experiences: Leveraging the experiences and insights of those who have previously embarked on similar endeavors can significantly expedite the development process. By standing on the shoulders of those who have already navigated the intricacies of AI cloud construction, organizations can accelerate their time to market and mitigate potential pitfalls.

- Optimizing GPU Utilization: Given the pivotal role of GPUs in powering AI workloads, optimizing their utilization is paramount for maximizing performance and cost efficiency. This necessitates implementing strategies and techniques to ensure that GPU resources are allocated and utilized optimally across various workloads.

- Ensuring Reliability and High Availability: Building a reliable AI cloud service is imperative, as downtime can have huge repercussions, both operationally and financially. With the high cost associated with maintaining AI clusters, minimizing downtime becomes paramount to safeguarding against potential losses and maintaining operational continuity.

By addressing these challenges with precision and expertise, organizations can move steadily toward robust and efficient AI cloud infrastructure.

Conclusion

The rapid advancements in AI, driven by cutting-edge research, increased computational power, and growing investments, have created a pressing need for specialized cloud infrastructure tailored to the unique requirements of AI workloads. AI Cloud services offer a compelling solution by providing scalable, on-demand access to AI-optimized hardware and software resources, enabling organizations to develop, train, and deploy AI models efficiently and cost-effectively.

By leveraging AI Cloud, organizations can overcome challenges such as lack of operational expertise, resource constraints, and supply chain issues, while benefiting from enhanced data sovereignty, access to state-of-the-art models, and the democratization of AI capabilities.

Building an AI Cloud involves a careful orchestration of various components, including powerful hardware accelerators, high-performance networking and storage, containerization and orchestration platforms, specialized AI frameworks and tools, and robust security and monitoring measures. The reference architecture presented in this article offers a high-level blueprint for constructing an AI Cloud, highlighting the key layers and enablers required for a successful implementation.

As AI continues to transform industries and drive innovation, the adoption of AI Cloud services is poised to accelerate, empowering organizations to harness the full potential of these transformative technologies while mitigating the complexities and challenges associated with managing AI infrastructure in-house.

Book a free call with us to go deeper into how we are building AI Cloud. We are excited to talk to you.

Read more:

- Deploy LLMs on Kubernetes using OpenLLM

- Optimizing NVIDIA GPUs with Partitioning in Kubernetes

- Taking AI ML Ideas to Production
