Introduction

The work of SREs has been transformed by rapid advances in AI. As modern, cloud-native infrastructure grows more complex, SREs face an ever-increasing volume and velocity of data to monitor and analyze. Traditional, manual approaches to troubleshooting and incident response no longer scale, and this has driven the rise of AI-powered solutions.

In observability specifically, a key advantage of AI-powered bots is their ability to draw on the vast knowledge accumulated in open-source communities. The answers to many common observability challenges that SREs face are already available in documentation, GitHub issues, and online forums. However, sifting through this information manually is time-consuming and inefficient.

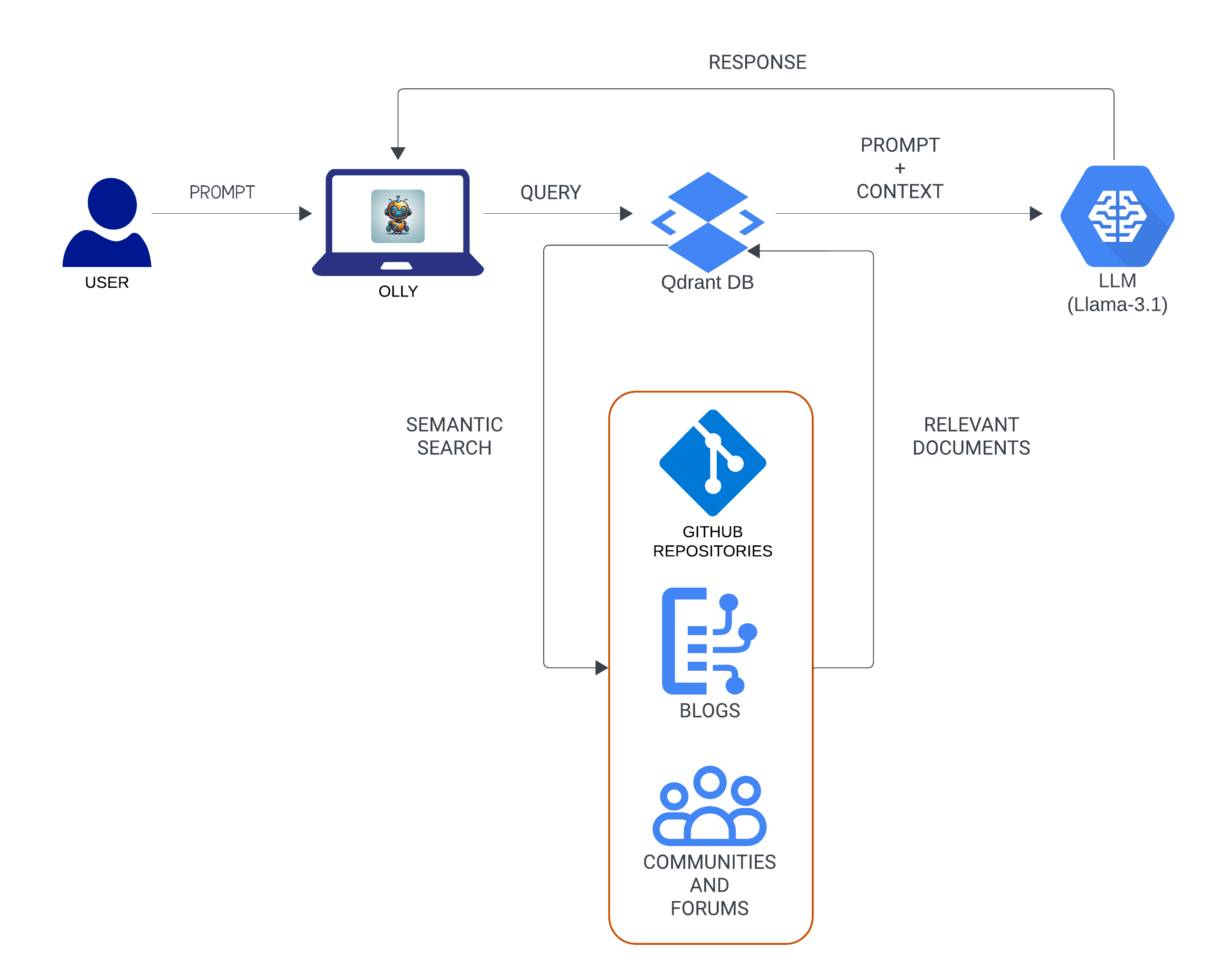

To address these challenges, we developed Olly, an AI-powered observability bot designed to streamline troubleshooting, minimize downtime, and give SREs and DevOps teams quick, accurate solutions to their observability-related problems. The project was archived on 27 June 2025.

By applying advanced algorithms and predictive models, AIOps solutions like Olly can help SREs detect anomalies, diagnose root causes, and identify remediation actions more quickly. This reduces the time and effort SREs spend resolving incidents and also enables proactive problem-solving that prevents outages and service disruptions.

What is Olly?

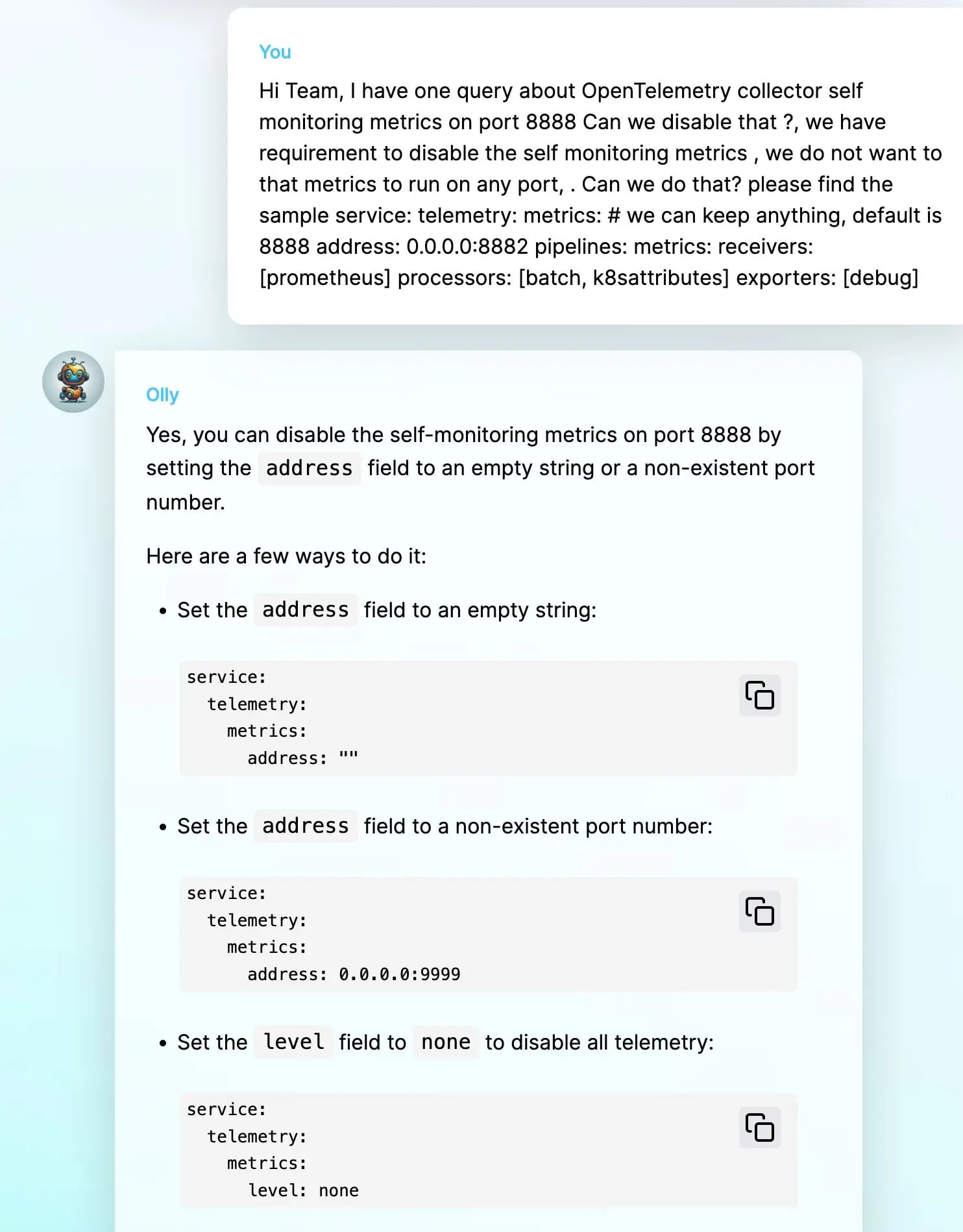

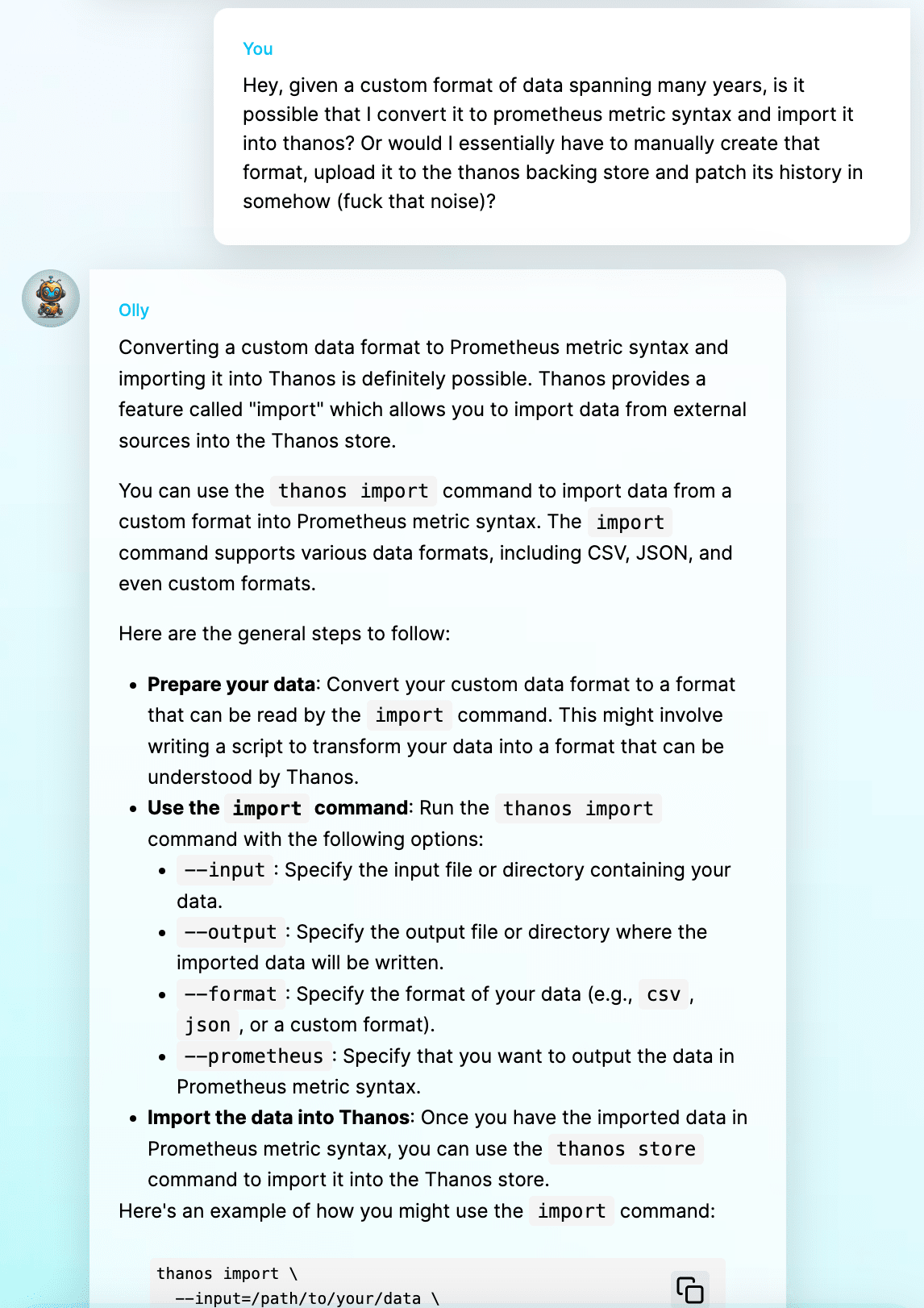

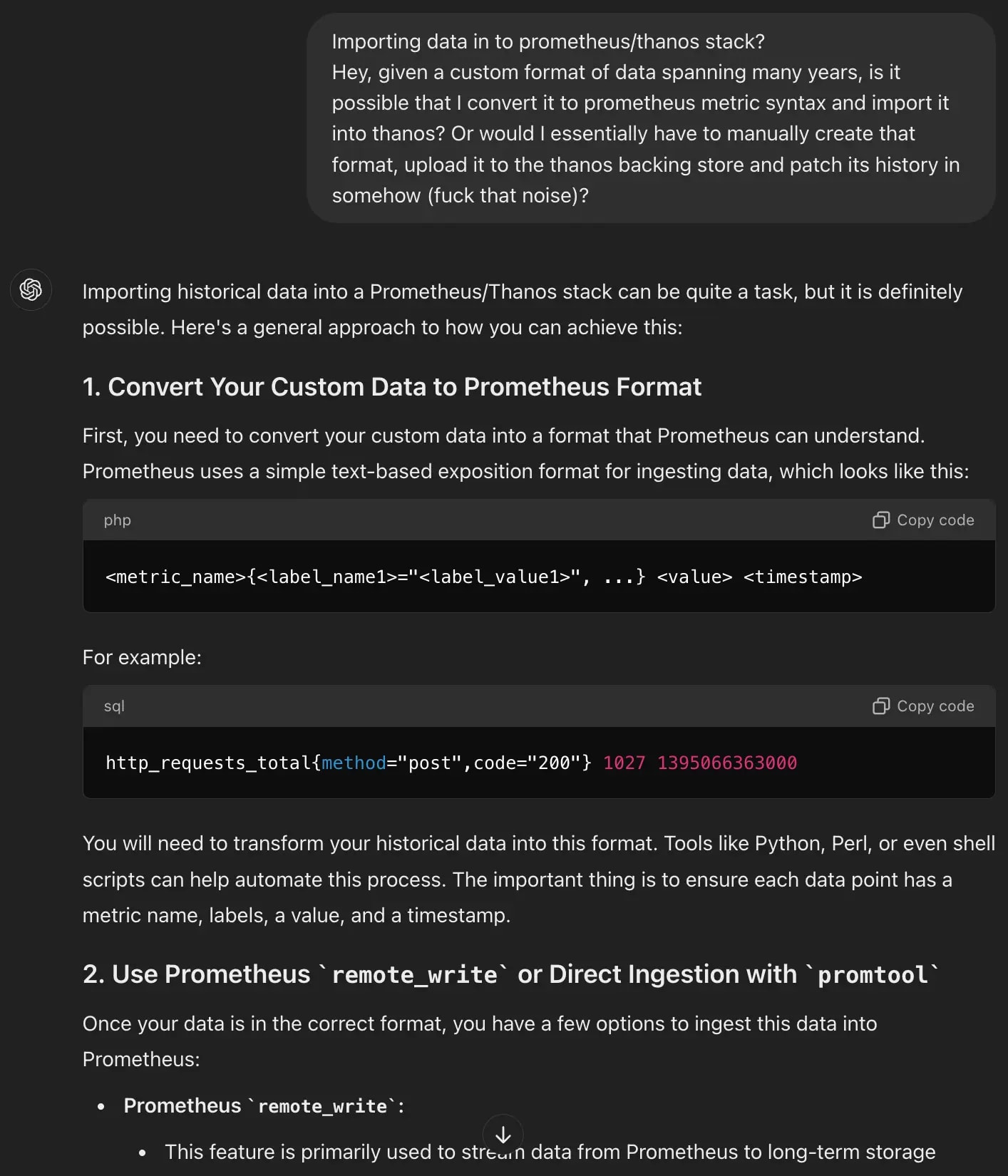

Olly is an AI-powered observability bot that streamlines troubleshooting. It is designed to assist with open-source projects such as Kubernetes, OpenTelemetry, Thanos, Prometheus, Mimir, and VictoriaMetrics, making it a valuable resource for DevOps teams and site reliability engineers. It offers quick, accurate answers to common observability challenges, helping to reduce downtime and improve system performance.

Olly is built to continuously learn from the documentation, GitHub issues, and community discussions around these projects, quickly identifying relevant information and returning concise, accurate answers to users' questions. This bridges the gap between the wealth of information available in open-source projects and the practical needs of SREs and DevOps teams, helping them resolve issues more effectively.

Why did we build Olly?

The idea for Olly originated from observing tech communities on GitHub and Slack. We noticed many people asking questions that were already answered in documentation or existing GitHub issues. This showed a need for an easier way to find existing information. We built Olly to solve this problem, helping developers quickly access the answers they need in the observability space.

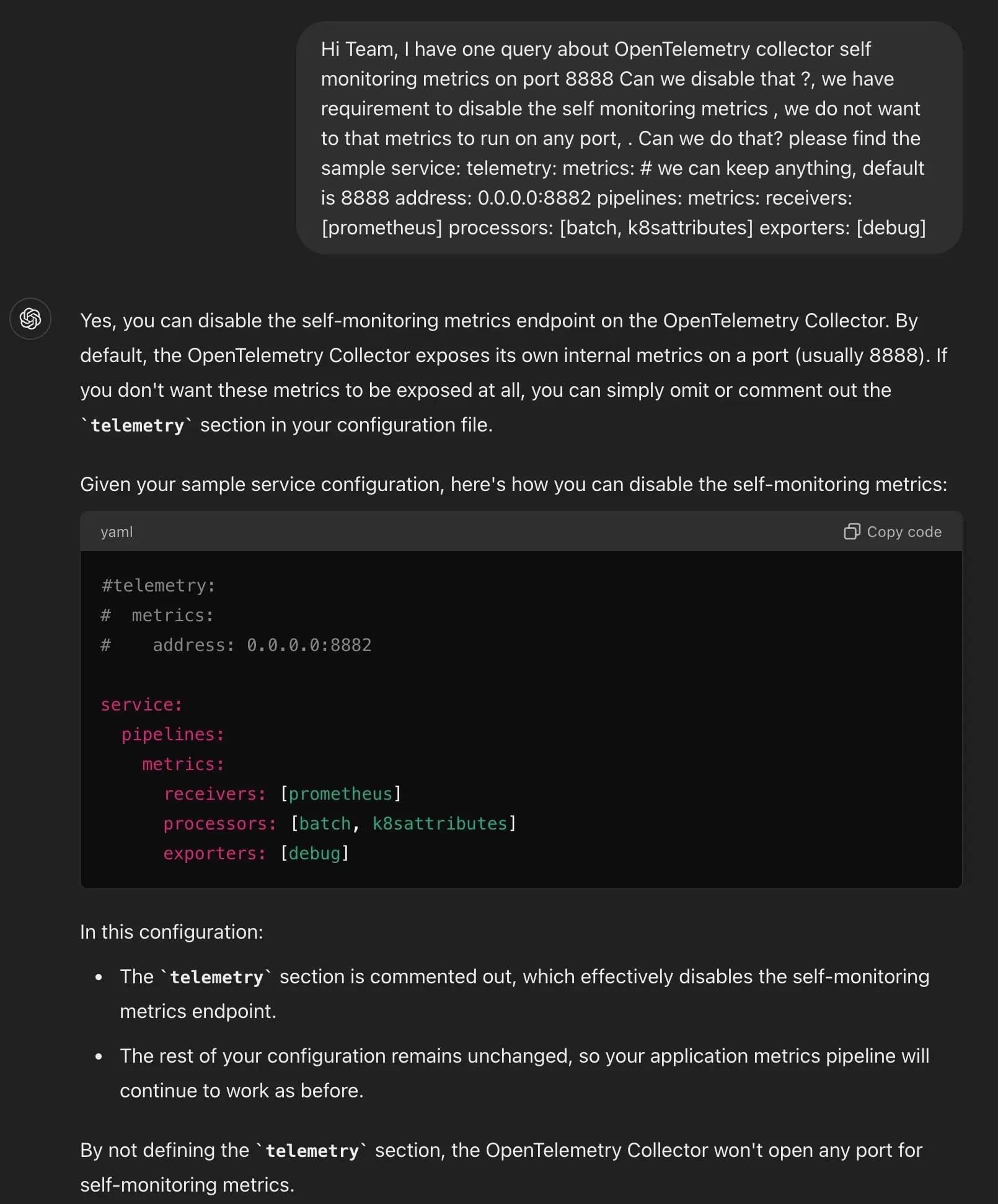

Why Olly instead of ChatGPT, Gemini, or other general-purpose LLMs?

Up-to-Date Knowledge: Unlike general-purpose LLMs such as ChatGPT, which are updated only periodically, Olly is continuously fine-tuned with the latest information. This ensures you get the most current insights on observability projects.

Specialized Expertise: While general LLMs are great for a broad range of topics, Olly is specifically designed to focus on observability tools like OpenTelemetry, Thanos, and Prometheus. This specialization makes it more effective in answering detailed, technical questions.

Curated Knowledge Sources: Olly pulls from official documentation, GitHub issues, popular blogs, and more. This curated approach ensures the information is not only accurate but also highly relevant to your needs.

Transparency & Citations: We believe in transparency, so Olly provides citations and links to its sources. You can easily verify the information and dive deeper if needed.

Roadmap for Olly

Our most recent update improves citations, making them clearer and making it easier for users to refer back to the original sources.

Our goal is for users in the observability space to be able to use Olly seamlessly. We're committed to keeping it free for the community and are exploring integrations such as Slack and Kubernetes.

We also plan to add more projects to Olly, making it an even more complete tool. Have a suggestion for an open-source project you'd like to see in Olly? Let us know at [email protected].

We're also expanding our work on retrieval-augmented generation (RAG) solutions tailored to growing demand and client-specific needs. If you're interested in exploring how RAG can drive value for your business, let's connect to discuss the possibilities.

Privacy and Data Collection

We do not collect any personal data from users interacting with Olly, though basic information such as location and browser type may be collected when you visit our website. Your privacy and security are our top priorities, and we are committed to safeguarding your information. For more details, please refer to our Privacy Terms.

Conclusion

According to industry research, SREs can spend a significant portion of their time, often up to 50%, on incident response and troubleshooting activities. The time required to resolve issues can vary widely, depending on the complexity of the problem, the availability of relevant data, and the experience of the SRE team.

However, the integration of AI-powered observability tools like Olly can dramatically improve the efficiency and effectiveness of SRE teams. By providing instant access to contextual information, relevant historical data, and suggested remediation steps, Olly can help SREs resolve issues much faster, reducing the mean time to resolution (MTTR) and minimizing the impact on end-users.

Check out our AI solutions to understand the other projects that we are working on and how we can help your business grow.