Introduction

Optical Character Recognition (OCR) is a fundamental technology that converts images of text into machine-readable text. This capability is essential for digitizing printed documents and streamlining data entry. Advances in artificial intelligence (AI) and machine learning (ML) have significantly improved traditional OCR systems: models that learn from varied training data can interpret text in complex or low-quality images more accurately.

Looking ahead, OCR is likely to integrate more closely with other AI domains, such as natural language processing (NLP) and image recognition. This would refine its core functions and extend its use into more sophisticated, end-to-end data processing solutions. The range of OCR applications is also expected to grow: beyond text digitization, future uses may include real-time translation services, accessibility tools for visually impaired people, and interactive educational platforms. This broader scope would make OCR an even more important part of our increasingly digital world.

Key Metrics for Evaluating OCR Systems

Evaluating the performance of OCR systems is crucial to ensure they meet the required accuracy and efficiency standards. The key metrics are Character Error Rate (CER) and Word Error Rate (WER), both of which are computed using the Levenshtein distance. A more advanced metric, ZoneMapAltCnt, provides further insight by also evaluating how well a system segments the page into text zones.

Levenshtein Distance

Levenshtein Distance is a measure of the difference between two sequences. In the context of OCR, it quantifies how many single-character edits (insertions, deletions, or substitutions) are necessary to change the recognized text into the ground truth text.
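To make the definition concrete, here is a minimal pure-Python sketch, not tied to any OCR library, that fills the standard dynamic-programming table for the Levenshtein distance and then walks back through it to count how many of the edits are substitutions, deletions, and insertions. The function name `edit_operations` is illustrative; `source` is the recognized text and `target` the ground truth.

```python
def edit_operations(source: str, target: str) -> dict:
    """Levenshtein distance from source to target, broken down into
    substitutions, deletions and insertions by backtracking the DP table."""
    n, m = len(source), len(target)
    # dist[i][j] = minimum edits to turn source[:i] into target[:j]
    dist = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dist[i][0] = i          # delete all i characters
    for j in range(m + 1):
        dist[0][j] = j          # insert all j characters
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if source[i - 1] == target[j - 1] else 1
            dist[i][j] = min(dist[i - 1][j] + 1,         # delete source[i-1]
                             dist[i][j - 1] + 1,         # insert target[j-1]
                             dist[i - 1][j - 1] + cost)  # match or substitute

    # Walk back from the bottom-right cell, following an optimal path
    counts = {"substitutions": 0, "deletions": 0, "insertions": 0}
    i, j = n, m
    while i > 0 or j > 0:
        mismatch = i > 0 and j > 0 and source[i - 1] != target[j - 1]
        if i > 0 and j > 0 and dist[i][j] == dist[i - 1][j - 1] + mismatch:
            counts["substitutions"] += int(mismatch)
            i, j = i - 1, j - 1
        elif i > 0 and dist[i][j] == dist[i - 1][j] + 1:
            counts["deletions"] += 1
            i -= 1
        else:
            counts["insertions"] += 1
            j -= 1
    counts["distance"] = dist[n][m]
    return counts

print(edit_operations("he1lo world", "hello world"))
```

For "he1lo world" against "hello world" the only edit is one substitution, so the distance is 1.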

Character Error Rate (CER)

Character Error Rate (CER) is a fundamental metric in OCR evaluation. It compares the recognized text with a ground-truth text, counts the character insertions, deletions, and substitutions needed to make the recognized text identical to the ground truth (their Levenshtein distance), and divides that count by the number of characters in the ground truth. It is usually reported as a percentage; because insertions are counted too, it can exceed 100% for very poor output.
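Written as a formula, with S, D and I the numbers of substituted, deleted and inserted characters and N the number of characters in the ground truth:

```latex
\mathrm{CER} = \frac{S + D + I}{N}
```

As a worked example, if the ground truth is "hello world" (N = 11 characters, counting the space) and the OCR output is "he1lo world", a single substitution fixes it, so CER = 1/11 ≈ 0.091, or about 9.1%.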

Word Error Rate (WER)

Word Error Rate (WER) measures the performance of OCR systems at the word level. It is computed like CER, but over whole words instead of individual characters: the number of word insertions, deletions, and substitutions needed to match the recognized text with the ground truth, divided by the number of words in the ground truth. Because one wrong character makes the whole word wrong, WER is typically higher than CER on the same output.
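A minimal pure-Python sketch of both metrics, assuming simple whitespace tokenization for WER (evaluation tools often also normalize case and punctuation first, which this sketch does not do). The same edit-distance routine works on strings for CER and on lists of words for WER; the example sentences are made up for illustration.

```python
def edit_distance(ref, hyp) -> int:
    """Levenshtein distance between two sequences: strings (characters)
    or lists of words."""
    prev = list(range(len(hyp) + 1))  # distances for an empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,              # deletion
                            curr[j - 1] + 1,          # insertion
                            prev[j - 1] + (r != h)))  # substitution (0 if equal)
        prev = curr
    return prev[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character Error Rate: character edits / characters in the reference."""
    if not reference:
        raise ValueError("reference text must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


def wer(reference: str, hypothesis: str) -> float:
    """Word Error Rate: word edits / words in the reference."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference text must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


print(cer("hello world", "he1lo world"))                    # 1 edit / 11 characters
print(wer("the cat sat on the mat", "the cat sit on mat"))  # 2 edits / 6 words
```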

ZoneMapAltCnt

The ZoneMapAltCnt metric is a more advanced approach to evaluating OCR systems. It assesses both the accuracy of text segmentation and the correctness of the recognized text within those segments: it evaluates the precision of the detected text zones and measures character and word accuracy within these zones. Because it accounts for segmentation errors explicitly, it gives a fuller picture than CER or WER alone, which compare only the final text.

Factors Affecting OCR Accuracy

Several factors influence the accuracy of OCR systems:


- Document Condition: Poor quality or damaged documents can significantly reduce OCR accuracy due to obscured or unreadable text.

- Image Resolution: Higher-resolution images provide more detail, allowing for better character recognition.

- Document Language: OCR systems must be optimized for specific languages, as character sets and linguistic rules vary widely.

- Preprocessing: Techniques such as noise reduction, binarization, and normalization improve text readability and OCR accuracy.

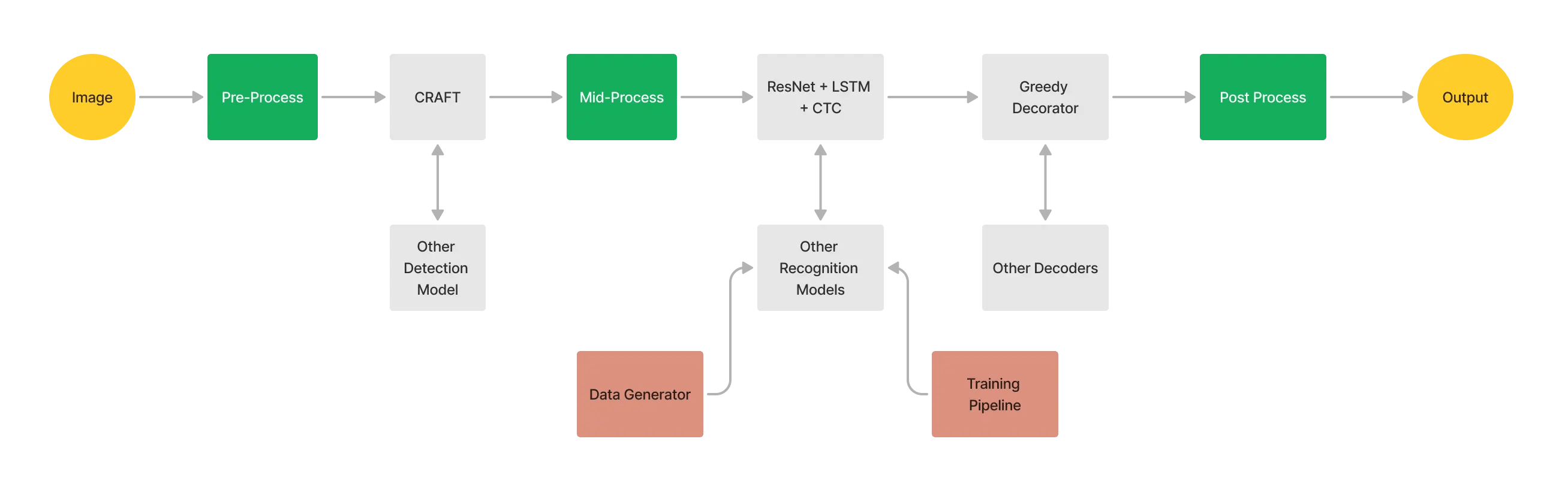

Framework for an OCR Model

Preprocessing Images for OCR

Opening an Image

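```python
import cv2

image_file = "PATH"  # replace with the path to your image

img = cv2.imread(image_file)
if img is None:  # cv2.imread returns None instead of raising on a bad path
    raise FileNotFoundError(f"Could not read image: {image_file}")
```

Inverting Image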

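Inverting an image in the context of OCR means reversing its colors, so dark text on a light background becomes light text on a dark background, and vice versa. Some OCR tools and preprocessing steps expect one polarity or the other, so inversion can improve recognition accuracy.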

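```python
import os

os.makedirs("temp", exist_ok=True)  # cv2.imwrite does not create missing folders
inverted_image = cv2.bitwise_not(img)
cv2.imwrite("temp/inverted.jpg", inverted_image)
```

Here, OpenCV handles the inversion for us, and we write the result to a "temp" folder for further analysis.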
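For 8-bit images, `cv2.bitwise_not` flips every bit of each pixel, which maps a value v to 255 - v: black (0) becomes white (255) and vice versa. A minimal NumPy sketch of the same operation on a tiny synthetic array, so no image file is needed:

```python
import numpy as np

# A tiny 8-bit grayscale "image": 0 is black, 255 is white
pixels = np.array([[0, 64], [200, 255]], dtype=np.uint8)

# Flipping all 8 bits of an unsigned byte is the same as computing 255 - value
inverted = np.bitwise_not(pixels)

print(inverted.tolist())  # 0 -> 255, 64 -> 191, 200 -> 55, 255 -> 0
```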

Binarization

Binarization in the context of OCR is a crucial preprocessing step that converts a color or grayscale image into a binary image, one that contains only two colors, typically black and white. This step matters because many traditional OCR engines, Tesseract among them, work on binarized images internally, and a clean black-and-white image separates the text from its background.

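```python
def grayScale(image):
    # OpenCV loads color images in BGR channel order
    return cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

gray_image = grayScale(img)

# Pixels brighter than 200 become white (255); all others become black (0)
thresh, im_bw = cv2.threshold(gray_image, 200, 255, cv2.THRESH_BINARY)
cv2.imwrite("temp/bw.jpg", im_bw)
```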
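The fixed threshold of 200 suits clean, evenly lit scans but can wipe out text on darker or unevenly lit pages. A common alternative, not used in the original pipeline, is Otsu's method, which chooses the threshold automatically by maximizing the between-class variance of the gray-level histogram. OpenCV exposes it through the `cv2.THRESH_OTSU` flag; the NumPy sketch below (8-bit grayscale input assumed) shows what that computation does.

```python
import numpy as np

def otsu_threshold(gray: np.ndarray) -> int:
    """Return the threshold t (0-255) that maximizes the between-class
    variance when an 8-bit image is split into pixels <= t and > t."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()            # probability of each gray level
    levels = np.arange(256)
    omega = np.cumsum(prob)             # weight of the "<= t" class
    mu = np.cumsum(prob * levels)       # cumulative mean up to t
    mu_total = mu[-1]                   # mean gray level of the whole image
    denom = omega * (1.0 - omega)
    # Between-class variance for every candidate t; 0 where a class is empty
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))

# Synthetic bimodal "page": dark text pixels (50) on light paper (200)
gray = np.array([[50, 200, 200], [200, 50, 200]], dtype=np.uint8)
t = otsu_threshold(gray)
binary = np.where(gray > t, 255, 0).astype(np.uint8)
print(t, binary.tolist())
```

With OpenCV, the equivalent call is `cv2.threshold(gray_image, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)`; the threshold argument is then ignored and the first return value is the threshold Otsu's method chose.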

Noise Removal

Noise removal is a critical preprocessing step in OCR because it enhances the quality of the input images, leading to more accurate text recognition.

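```python
import numpy as np

def noiseRemoval(image):
    # Note: with a 1x1 kernel, dilate/erode/close leave the image unchanged;
    # use a larger kernel such as (2, 2) for these three steps to have an effect
    kernel = np.ones((1, 1), np.uint8)
    image = cv2.dilate(image, kernel, iterations=1)
    image = cv2.erode(image, kernel, iterations=1)
    image = cv2.morphologyEx(image, cv2.MORPH_CLOSE, kernel)
    # A 3x3 median filter removes isolated specks ("salt-and-pepper" noise)
    image = cv2.medianBlur(image, 3)
    return image

no_noise = noiseRemoval(im_bw)
cv2.imwrite("temp/no_noise.jpg", no_noise)
```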
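In `noiseRemoval`, the step that actually removes isolated specks is `cv2.medianBlur(image, 3)`: with the 1x1 kernel in the original code, the dilate, erode, and close calls leave the image unchanged. The NumPy sketch below shows what a 3x3 median filter does. It is for illustration only; `cv2.medianBlur` is the fast version to use in practice, and its border handling may differ from the edge padding chosen here.

```python
import numpy as np

def median_filter_3x3(image: np.ndarray) -> np.ndarray:
    """Replace each pixel with the median of its 3x3 neighborhood.
    Borders are handled by repeating the edge pixels."""
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    # Stack the 9 shifted views of the image, one per neighborhood offset
    neighbors = np.stack([padded[dy:dy + h, dx:dx + w]
                          for dy in range(3) for dx in range(3)])
    return np.median(neighbors, axis=0).astype(image.dtype)

# A white page with one isolated black speck: 8 of 9 neighbors are white
page = np.full((5, 5), 255, dtype=np.uint8)
page[2, 2] = 0
cleaned = median_filter_3x3(page)  # the speck is gone

# A 3-pixel-thick black stroke survives, because most of each
# stroke pixel's neighbors are also black
stroke = np.full((5, 7), 255, dtype=np.uint8)
stroke[1:4, :] = 0
print((median_filter_3x3(stroke) == stroke).all())
```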

Dilation and Erosion

In OCR, dilation and erosion play important roles in improving the readability and recognition accuracy of text. Dilation makes characters more visible by thickening them, which helps with low-quality or faint prints. Conversely, erosion thins characters, which can separate strokes that run into each other and reduce misreadings.

```python
import numpy as np

# Erosion: thin the text strokes
def thin_font(image):
    # Invert so the text becomes the white foreground that OpenCV erodes
    image = cv2.bitwise_not(image)
    # As in noiseRemoval, a 1x1 kernel is effectively a no-op; try (2, 2)
    kernel = np.ones((1, 1), np.uint8)
    image = cv2.erode(image, kernel, iterations=1)
    # Invert back to black text on a white background
    return cv2.bitwise_not(image)

# Dilation: thicken the text strokes
def thick_font(image):
    image = cv2.bitwise_not(image)
    kernel = np.ones((2, 2), np.uint8)
    image = cv2.dilate(image, kernel, iterations=1)
    return cv2.bitwise_not(image)

eroded_image = thin_font(no_noise)
dilate_image = thick_font(no_noise)
cv2.imwrite("temp/eroded_image.jpg", eroded_image)
cv2.imwrite("temp/dilate.jpg", dilate_image)
```

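OpenCV's dilation and erosion act on the white (non-zero) foreground: dilation grows white regions and erosion shrinks them. Because our text is black on a white background, `thin_font` and `thick_font` first invert the image so that the text becomes the white foreground (white text on a black background), apply the operation, and then invert it back.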
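The NumPy sketch below makes this concrete. It implements dilation and erosion with a square window as a neighborhood maximum and minimum (odd window sizes only; padding with black for dilation and white for erosion are choices of this sketch). On white-on-black text, dilation thickens a stroke and erosion thins it back, whereas dilating black-on-white text erases it.

```python
import numpy as np

def _windows(image, size, pad_value):
    """Stack of all size x size shifted views of the image."""
    if size % 2 == 0:
        raise ValueError("this sketch only supports odd window sizes")
    pad = size // 2
    padded = np.pad(image, pad, mode="constant", constant_values=pad_value)
    h, w = image.shape
    return np.stack([padded[dy:dy + h, dx:dx + w]
                     for dy in range(size) for dx in range(size)])

def dilate(image, size=3):
    """Each pixel becomes the maximum of its neighborhood: white grows."""
    return _windows(image, size, pad_value=0).max(axis=0)

def erode(image, size=3):
    """Each pixel becomes the minimum of its neighborhood: white shrinks."""
    return _windows(image, size, pad_value=255).min(axis=0)

# White "text" on a black background, as after cv2.bitwise_not
img = np.zeros((5, 5), dtype=np.uint8)
img[2, 2] = 255
thick = dilate(img)   # the white dot grows into a 3x3 block
thin = erode(thick)   # eroding the block shrinks it back to a single pixel

# Without inverting first, dilation grows the white background instead,
# and the black dot disappears completely
print(dilate(np.bitwise_not(img)).min())
```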

Best OCR Models for Different Use Cases

1. Amazon Textract

- Use Case: Industry Level

- Strength: Amazon Textract is highly effective for industrial-scale document processing, capable of extracting text and data from virtually any type of document, including forms and tables. It integrates seamlessly with other AWS services, making it ideal for businesses looking to automate document workflows in cloud environments.


2. Surya OCR

- Use Case: Broad Language Support

- Strength: Surya OCR stands out for its extensive language support, making it suitable for global applications where documents in multiple languages need to be processed. This makes it a valuable tool for international organizations and government agencies dealing with multilingual data.


3. Tesseract

- Use Case: Customizable and Versatile

- Strength: Tesseract is an open-source OCR engine that offers flexibility and customization, which is perfect for developers looking to integrate OCR into their applications without significant investment. Its versatility makes it a popular choice for academic research, prototype development, and small business applications.


4. EasyOCR

- Use Case: Good for Small and Simple Projects

- Strength: EasyOCR is an accessible and straightforward tool for developers who need a quick and efficient solution for small-scale projects. It supports multiple languages and is easy to set up, making it ideal for startups and individual developers working on applications with less complex OCR requirements.


Other OCR Use Cases

- Automated Form Processing

- Digital Archiving

- License Plate Recognition

- Legal Document Analysis

- Educational Resources

Demo on Surya-OCR

Overview of Surya OCR

Surya OCR is a comprehensive document OCR toolkit designed to handle a wide range of document types. It supports OCR in over 90 languages, benchmarks favorably against other leading cloud OCR services according to its authors, and is also available as a hosted API.

Key Features:

- Multilingual Support: Capable of performing OCR in more than 90 languages, making it highly versatile for global applications.

- Advanced Text Detection: Offers line-level text detection capabilities, which work effectively across any language.

- Sophisticated Layout Analysis: Detects various layout elements such as tables, images, headers, etc., and determines their arrangement within the document.

- Reading Order Detection: Identifies and follows the reading order in documents, which is crucial for understanding structured data like forms and articles.

- Performance Tips: Surya's documentation includes guidance on tuning GPU and CPU usage, such as batch sizes, so that resources are used efficiently during OCR processing.

Usage

- Surya is particularly adept at handling complex OCR tasks such as processing scientific papers, textbooks, scanned documents, and even mixed-language content efficiently.

- The toolkit is also available through a hosted API that supports PDFs, images, Word documents, and PowerPoint presentations; its provider advertises high reliability and consistent performance without latency spikes.

- Surya can be installed via pip and requires Python 3.9+ and PyTorch. The model weights download automatically upon the first run.

- It includes a user-friendly Streamlit app that allows for interactive testing of the OCR capabilities on images or PDF files.

In this demonstration, we will explore how to perform Text Detection, OCR, Reading Layout, and Reading Order using Surya. We will cover three methods: using the Streamlit GUI, through the Command Line Interface, and directly from Python code.

Surya OCR Through GUI

To run the Surya OCR GUI locally, open your Command Line Interface (CLI) and install Surya together with Streamlit:

```bash
pip install surya-ocr streamlit
```

After the installation succeeds, launch the app from the CLI:

```bash
surya_gui
```

The app will start running at http://localhost:8501.

If all the steps above succeed, the Surya dashboard opens in your browser. Then follow these steps:

- Click the Browse files button.

- Select your desired file and language.

- Choose one of the following:

  - Run Text Detection
  - Run OCR
  - Run Layout Analysis
  - Run Reading Order

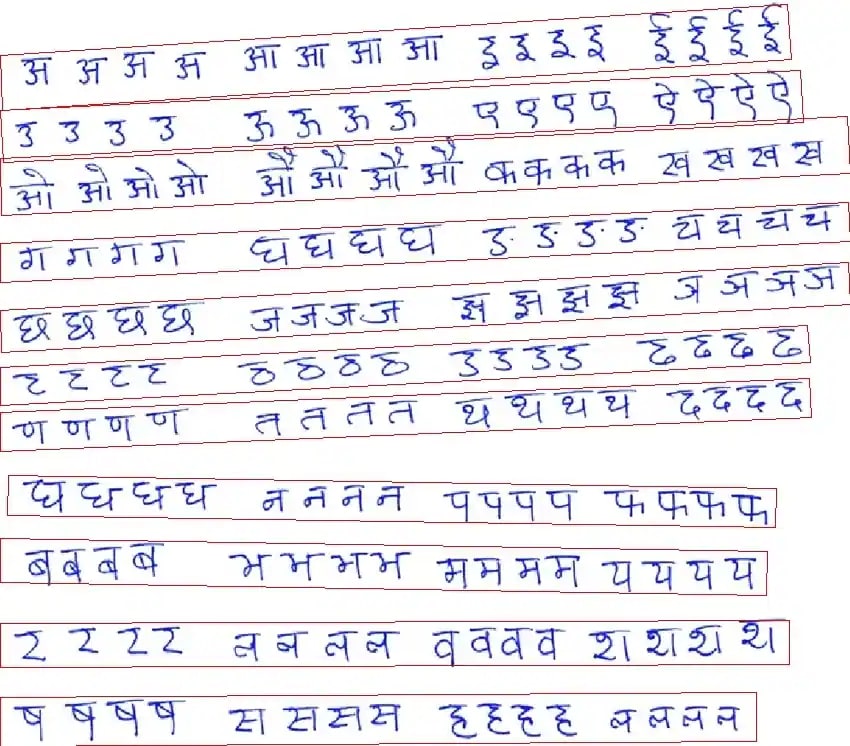

All images from this point on show output produced by Surya OCR.

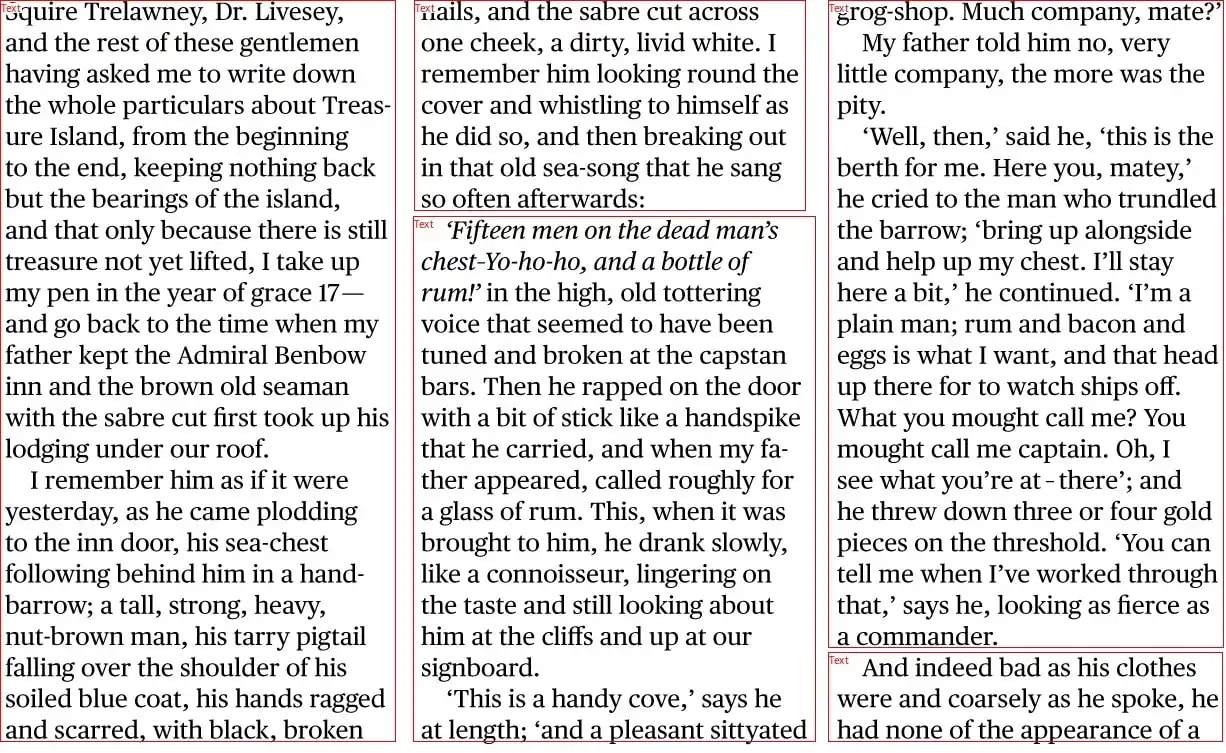

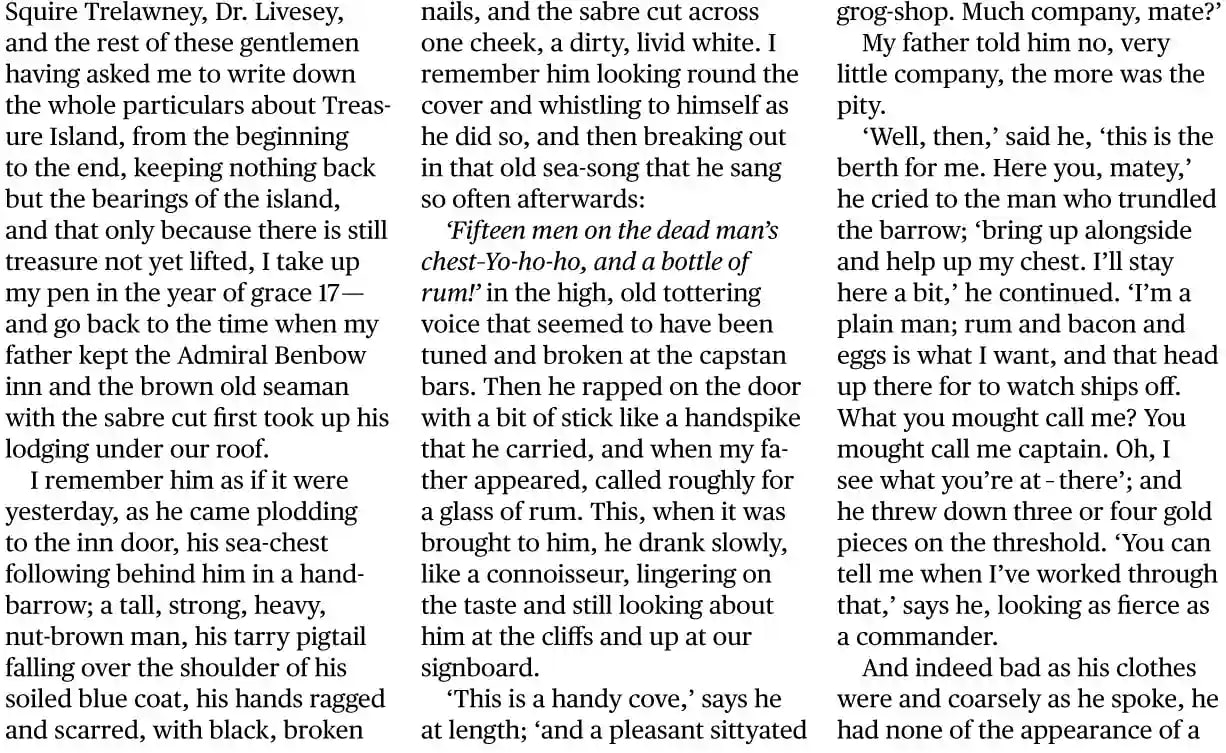

Text Detection

- Identifies areas within an image or document where text is present. This step involves locating text blocks and distinguishing them from non-text elements like images and backgrounds.
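Surya performs this step with a trained neural detector. To illustrate the underlying idea on a clean, binarized page, here is a classic non-learned technique (not Surya's method): the horizontal projection profile, which counts text pixels in each row and treats each run of rows containing text as one line. `find_text_lines` is a hypothetical helper, and it assumes black (0) text on a white (255) background.

```python
import numpy as np

def find_text_lines(binary: np.ndarray, min_pixels: int = 1) -> list[tuple[int, int]]:
    """Return (top, bottom) row ranges, bottom exclusive, of text lines in a
    binarized page with black (0) text on a white (255) background."""
    profile = (binary == 0).sum(axis=1)   # number of text pixels per row
    has_text = profile >= min_pixels
    lines, start = [], None
    for row, flag in enumerate(has_text):
        if flag and start is None:
            start = row                   # a text line begins
        elif not flag and start is not None:
            lines.append((start, row))    # a text line ends
            start = None
    if start is not None:                 # a line touching the bottom edge
        lines.append((start, len(has_text)))
    return lines

page = np.full((10, 20), 255, dtype=np.uint8)
page[1:3, 2:15] = 0   # first "line" of text
page[6:9, 4:18] = 0   # second "line" of text
print(find_text_lines(page))  # [(1, 3), (6, 9)]
```

This only works for straight, single-column text; skewed pages, multi-column layouts, and text over images are exactly where learned detectors like Surya's pay off.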

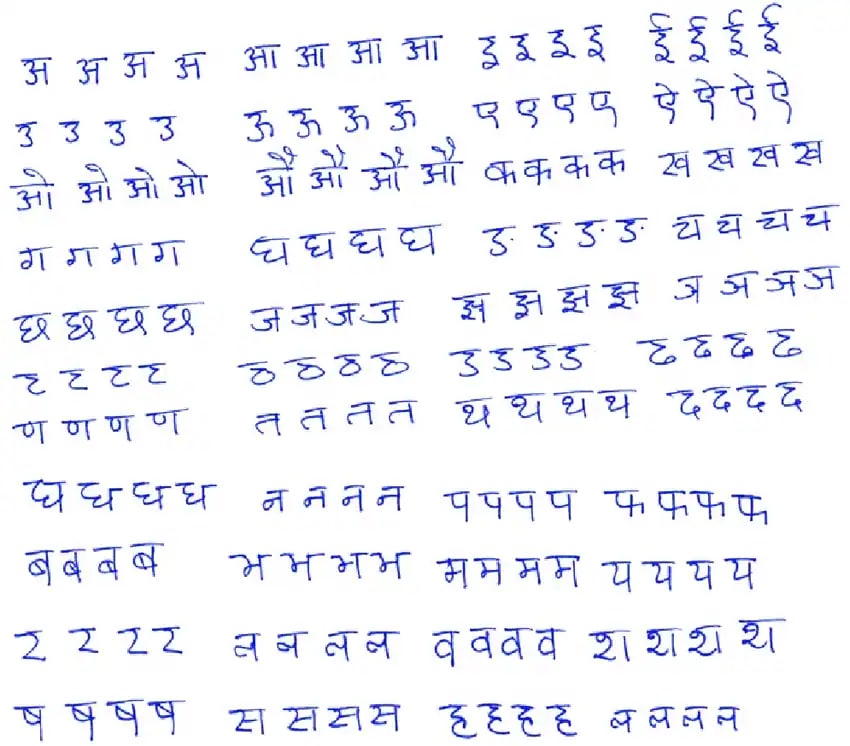

OCR (Optical Character Recognition)

- Transforms the detected text areas into machine-readable characters. This process involves analyzing the shapes of characters and converting them into corresponding text data.

- Note that here we uploaded an inverted image.

Reading Layout

- Analyzes the physical structure of the document to understand how different elements are organized. This includes the detection of headers, footers, columns, tables, and images, helping to interpret the document as a whole rather than just isolated text blocks.

Reading Order

- Determines the sequence in which the text should be read to maintain logical coherence. This is crucial for documents with complex layouts, such as multiple columns or pages with sidebars, ensuring that the output text follows the intended flow of information.

- Note the numbers in the top-left corner of the layout boxes: they give each box's position in the reading order.
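Surya predicts reading order with a trained model. For intuition, the simplest non-learned baseline (not Surya's approach) sorts boxes top to bottom, groups boxes whose top edges are close into the same row, and reads each row left to right. Boxes use [x1, y1, x2, y2] coordinates; `naive_reading_order` and its `row_tolerance` of 10 pixels are illustrative assumptions.

```python
def naive_reading_order(bboxes, row_tolerance=10):
    """Return indices of bboxes ([x1, y1, x2, y2]) in a simple reading order:
    rows from top to bottom, boxes within a row from left to right."""
    order = sorted(range(len(bboxes)), key=lambda i: bboxes[i][1])
    rows, current = [], []
    for i in order:
        # Start a new row when this box's top is well below the row's first box
        if current and bboxes[i][1] - bboxes[current[0]][1] > row_tolerance:
            rows.append(current)
            current = []
        current.append(i)
    if current:
        rows.append(current)
    return [i for row in rows for i in sorted(row, key=lambda k: bboxes[k][0])]

boxes = [
    [300, 12, 500, 40],   # right-hand header box
    [10, 10, 250, 40],    # left-hand header box
    [10, 60, 500, 120],   # paragraph below both
]
print(naive_reading_order(boxes))  # [1, 0, 2]
```

A heuristic like this reads straight across two-column pages, mixing the columns line by line, which is why a learned reading-order model is useful for complex layouts.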

Using Surya OCR via Command Line

Text Recognition

- Open a Command Prompt: Navigate to the folder containing the images you wish to process.

- Execute Surya OCR: Type the following command to process your images with Surya OCR, replacing DATA_PATH with the path to your images relative to your current directory. This command will output the recognized text in the "results" folder.

```bash
surya_ocr DATA_PATH --images --langs hi,en
```

In the command above, `--langs` specifies the languages for OCR as comma-separated ISO language codes: "hi" is Hindi and "en" is English. Surya OCR supports more than 90 languages; for the complete list of supported codes, refer to the documentation.

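Similarly, for layout analysis and reading order (text line detection on its own is available as `surya_detect`):

Layout Analysis

```bash
surya_layout DATA_PATH --images
```

Reading Order

```bash
surya_order DATA_PATH --images
```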

These commands produce the same results as those shown for the GUI earlier in this article.

Using Surya OCR from Python

Sometimes the images we want to run OCR on are not good enough as they are and need the preprocessing techniques discussed earlier in this article, along with other improvements. In that case, we need a pipeline that each image passes through before recognition.

This is easy to do in Python: chain the preprocessing functions together, then apply Surya OCR to the processed image.
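A minimal sketch of such a pipeline, assuming each preprocessing step is a function that takes and returns a NumPy array, as the OpenCV helpers earlier in this article do. `make_pipeline`, `invert`, `binarize`, and `to_pil` are hypothetical names; the two NumPy steps stand in for the OpenCV functions so the example runs without an image file. The final conversion to a PIL image matches the Surya examples, which pass images opened with `Image.open` to Surya.

```python
from functools import reduce
from typing import Callable

import numpy as np

Step = Callable[[np.ndarray], np.ndarray]

def make_pipeline(*steps: Step) -> Step:
    """Chain preprocessing steps so each one receives the previous output."""
    def run(image: np.ndarray) -> np.ndarray:
        return reduce(lambda img, step: step(img), steps, image)
    return run

# Hypothetical NumPy stand-ins for the OpenCV preprocessing steps
def invert(image: np.ndarray) -> np.ndarray:
    return np.bitwise_not(image)

def binarize(image: np.ndarray, threshold: int = 128) -> np.ndarray:
    return np.where(image > threshold, 255, 0).astype(np.uint8)

def to_pil(image: np.ndarray):
    """Convert the processed array to a PIL image for Surya."""
    from PIL import Image  # Pillow, already used in the Surya examples
    return Image.fromarray(image)

preprocess = make_pipeline(binarize, invert)
gray = np.array([[10, 240], [200, 30]], dtype=np.uint8)
print(preprocess(gray).tolist())
```

With real images, swap in the preprocessing functions from earlier (for example `grayScale` and `noiseRemoval`) and pass `[to_pil(preprocess(img))]` to `run_ocr` in place of `[image]`. Depending on the Surya version, a single-channel image may need `.convert("RGB")` first.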

```bash
pip install surya-ocr
```

Text Recognition

This Python script utilizes the surya.ocr library to perform optical character recognition (OCR) on images. The script:

- Loads an image for OCR.

- Initializes necessary models and processors for text detection and recognition.

- Executes the OCR process on the image, returning text predictions.

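- Version note: older Surya examples load the detection model from `surya.model.detection.segformer`, a module that is missing in later releases. To run that older code, install version 0.4.14 (`pip install surya-ocr==0.4.14`); the snippets below import from `surya.model.detection.model` instead. The module layout has changed between releases, so check the README of the version you install.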

```python
from PIL import Image
from surya.ocr import run_ocr
from surya.model.detection import model
from surya.model.recognition.model import load_model
from surya.model.recognition.processor import load_processor

IMAGE_PATH = "PATH"  # placeholder: path to your image

image = Image.open(IMAGE_PATH)
langs = ["en"]  # ISO codes of the languages in the image

# Text detection model and processor
det_processor, det_model = model.load_processor(), model.load_model()
# Text recognition model and processor
rec_model, rec_processor = load_model(), load_processor()

# Perform OCR and get predictions (one language list per image)
predictions = run_ocr([image], [langs], det_model, det_processor, rec_model, rec_processor)
```
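The `predictions` returned by `run_ocr` contain one result per input image. A small helper for turning them into plain text might look like the sketch below. It assumes, as in the Surya 0.4.x examples, that each result has a `text_lines` list whose entries carry `text` and `confidence`; field names may differ between versions, so check yours. `predictions_to_text` is a hypothetical name, and it accepts both attribute-style objects and plain dicts.

```python
def predictions_to_text(predictions, min_confidence=0.0):
    """Join recognized lines into one plain-text string per image.

    Assumed structure: prediction.text_lines[i].text / .confidence
    (as attributes or dict keys), as in Surya 0.4.x-era results.
    """
    def field(obj, name):
        return obj[name] if isinstance(obj, dict) else getattr(obj, name)

    pages = []
    for prediction in predictions:
        lines = [
            field(line, "text")
            for line in field(prediction, "text_lines")
            # Drop low-confidence lines; the default keeps everything
            if field(line, "confidence") >= min_confidence
        ]
        pages.append("\n".join(lines))
    return pages

# Stand-in data shaped like the assumed structure (not real Surya output)
sample = [{"text_lines": [{"text": "Hello", "confidence": 0.98},
                          {"text": "w0rld", "confidence": 0.41}]}]
print(predictions_to_text(sample))                      # ['Hello\nw0rld']
print(predictions_to_text(sample, min_confidence=0.5))  # ['Hello']
```

With real output, `texts = predictions_to_text(predictions)` gives one string per image.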

Line Detection

This segment of the code focuses on detecting textual lines within an image:

- It loads an image and uses the `surya.detection` module.

- Applies a text detection model to find textual lines.

- Outputs a list of dictionaries containing detected text lines for further processing.

```python
from PIL import Image
from surya.detection import batch_text_detection
from surya.model.detection.model import load_model, load_processor

IMAGE_PATH = "PATH"  # placeholder: path to your image

image = Image.open(IMAGE_PATH)
model, processor = load_model(), load_processor()

# Get predictions of text lines (one result per input image)
predictions = batch_text_detection([image], model, processor)
```

Layout Analysis

This script analyzes the layout of the page within an image:

- Loads an image and initializes models for both line detection and layout analysis.

- First, detects text lines, then performs layout analysis based on these lines.

- Returns structured data indicating the layout of content in the image.

```python
from PIL import Image
from surya.detection import batch_text_detection
from surya.layout import batch_layout_detection
from surya.model.detection.model import load_model, load_processor
from surya.settings import settings

IMAGE_PATH = "PATH"  # placeholder: path to your image

image = Image.open(IMAGE_PATH)
# The same loaders serve both models: the default checkpoint is the line
# detector, while LAYOUT_MODEL_CHECKPOINT selects the layout model
det_model, det_processor = load_model(), load_processor()
model = load_model(checkpoint=settings.LAYOUT_MODEL_CHECKPOINT)
processor = load_processor(checkpoint=settings.LAYOUT_MODEL_CHECKPOINT)

# First detect lines, then analyze layout
line_predictions = batch_text_detection([image], det_model, det_processor)
layout_predictions = batch_layout_detection([image], model, processor, line_predictions)
```

Reading Order

This code snippet establishes the reading order within a document:

- Loads an image and extracts bounding boxes (bboxes) of detected text elements.

- Uses the `surya.ordering` module to determine the sequential order of text blocks.

- Outputs ordered text predictions to guide further content analysis or extraction.

```python
from PIL import Image
from surya.ordering import batch_ordering
from surya.model.ordering.processor import load_processor
from surya.model.ordering.model import load_model

IMAGE_PATH = "PATH"  # placeholder: path to your image

image = Image.open(IMAGE_PATH)
# Placeholder: your boxes, each as [x1, y1, x2, y2] (e.g. from layout analysis)
bboxes = [bbox1, bbox2, ...]
model, processor = load_model(), load_processor()

# Get ordered text predictions
order_predictions = batch_ordering([image], [bboxes], model, processor)
```

Like the detection and layout functions, `batch_ordering` returns structured results for each image; here they describe the input boxes together with the order in which they should be read, reflecting the logical flow of the page.

For a deeper dive into Surya-OCR, an advanced OCR system, enthusiasts and developers can explore its extensive components on GitHub. This open-source project is readily accessible for those eager to understand its mechanics or contribute to its evolution. Visit Surya-OCR on GitHub to explore the documentation, source code, and more.

Limitations of Surya-OCR and Scope of Improvement

Surya-OCR stands out for its impressive multilingual support and specialization in digitizing printed documents. Despite its strengths, there are a few limitations users should be aware of. Primarily, Surya-OCR is optimized for printed text and can struggle with text on complex backgrounds or in handwritten formats, potentially leading to inaccuracies.

Additionally, the toolkit requires substantial GPU resources for optimal performance, with recommendations like 16GB of VRAM for batch processing. This high demand may exclude users with limited hardware capabilities. Also, issues with the confidence levels in the model's text detection could affect its reliability, especially in critical applications where accuracy is paramount.

Optical Character Recognition (OCR) technology has made significant strides, evolving from simple text digitization into an integral part of complex AI-driven applications. This evolution can be taken further by integrating OCR with multimodal Large Language Models (LLMs), which can process and understand information from multiple data types, including text, images, and audio.

Multimodal LLMs can complement traditional OCR systems in several ways. While OCR excels at extracting raw text from images, multimodal LLMs can interpret the context within which the text appears, understanding nuances and subtleties that OCR alone might miss. This synergy allows for a more nuanced understanding of documents in contexts where text is intertwined with visual elements, such as infographics, annotated diagrams, and mixed media documents.

For example, in educational materials where diagrams are annotated with textual explanations, OCR can extract the text, and the multimodal LLM can provide insights into how the text relates to the graphical content. This could be invaluable for creating accessible educational tools, where both text and visuals need to be made comprehensible to users with different needs.

Conclusion

In my opinion, OCR has transcended its traditional role, enhanced by advancements in AI and ML, to become a cornerstone technology in our digital era. As it integrates further with fields like NLP and image recognition, OCR is expanding into dynamic applications such as real-time translation and accessibility tools, transforming how we interact with information. But there is still much to be done in the field.

Multimodal Large Language Models (LLMs) represent a promising evolution in OCR technology. By combining OCR with these models, we can extract not just text but understand the context of images, making digital content more accessible and interpretable.

As we continue to refine these technologies, the potential for creating seamless and intuitive user interfaces that can interpret and respond to a complex blend of textual, visual, and auditory inputs is immense. This could revolutionize the way we interact with our devices, making technology an even more integral part of everyday life.
