Introduction

The rise of OLAP databases has been hard to ignore, with the relentless growth of data and demand for powerful, fast analytics tools. OLTP databases have been invaluable for transaction-heavy applications but often fall short when faced with the sheer complexity of modern analytical workloads. Enter OLAP databases, designed to make slicing through massive datasets feel nearly effortless.

In exploring OLAP solutions, we found two that stood out: ClickHouse and DuckDB. While both are OLAP-focused, they’re fundamentally different tools, each with unique strengths. ClickHouse is a powerhouse designed for multi-node, distributed systems that scale up to petabytes of data. DuckDB, on the other hand, is more like the SQLite of OLAP—a nimble, desktop-friendly database that brings OLAP capabilities to local environments without the need for elaborate setup. Despite their differences, these databases share a versatility that makes them adaptable to a range of tasks: querying data in object storage, handling cross-database queries, and even parsing compressed files or semi-structured data.

This article will mainly focus on the capabilities of DuckDB -- while touching on ClickHouse and its key differences which makes both the projects great in their own niche. We have covered ClickHouse in much detail here.

DuckDB: Speedy Analytics, Zero Setup

DuckDB is a welcome solution for data analysts and scientists seeking efficient, local OLAP processing without the usual infrastructure demands. DuckDB has carved out a unique niche as a lightweight relational database, built to perform analytical tasks with impressive speed while remaining incredibly easy to use.

For those of us who are accustomed to the high costs of platforms like Redshift, Databricks, Snowflake, or BigQuery, DuckDB offers a refreshing alternative. DuckDB allows you to simply upload files to cloud storage, enabling teams to use their existing laptops compute to perform analytics, bypassing the need for expensive and complex infrastructure for smaller tasks and analysis.

Personally, we use DuckDB as a go-to solution for handling tasks that exceed the capacity of tools like Pandas or Polars. Its ability to load large CSVs directly into dataframes with speed and efficiency has streamlined my workflows. It performs well for ETL tasks in Kubernetes environments, showcasing its adaptability and reliability across a wide range of data processing needs.

ClickHouse: Analytics for Scale

Then there's ClickHouse, a database built for scale, known for its incredible speed and efficiency when working with vast amounts of data. ClickHouse’s column-oriented architecture, coupled with unique table engines, enables it to process millions of rows per second. Companies like Cloudflare use it to cut down memory usage by over four times, highlighting its role in real-world, large-scale applications. In environments where data volumes are measured in terabytes or petabytes, ClickHouse really shines.

One of the standout aspects of ClickHouse is its ability to leverage the full power of the underlying hardware, optimizing memory and CPU usage to handle massive, complex queries with ease. The distributed nature of ClickHouse allows it to scale horizontally across nodes, making it resilient and highly available for mission-critical applications. Its real-time query capabilities allow companies to power dashboards and interactive reports with minimal latency, providing instant insights for fast-paced decision-making. For anyone managing large-scale analytics, it delivers a robust set of features for everything from web analytics to detailed log analysis.

Similarities and Differences between ClickHouse and DuckDB

While both ClickHouse and DuckDB excel in fast, efficient querying and share similar columnar architectures, their design philosophies and deployment models cater to unique needs. Together, these tools showcase the spectrum of options available for handling analytical workloads, from enterprise-scale, distributed systems to flexible, embedded analytics.

ClickHouse: Enterprise-Grade, Big Data Workloads

ClickHouse is designed for large-scale analytics, widely adopted by enterprises handling vast datasets for real-time analytics, monitoring, and business intelligence. Built to handle multi-node deployments, ClickHouse scales effectively with its Massively Parallel Processing (MPP) architecture, making it a strong choice for multi-terabyte, distributed, cloud-first deployments.

DuckDB: Small-to-Medium Data and Data Science Workflows

DuckDB is ideal for small-to-medium datasets and data science tasks. It’s lightweight, embedded, and designed to run directly on local machines with minimal configuration. This makes it perfect for data exploration and prototyping on tens-of-gigabyte datasets without needing a complex database setup.

Installation and Embedding: ClickHouse’s chDB and DuckDB’s In-Process Model

DuckDB is completely embedded—no server setup is needed, making it easily deployable within the same process as the host application. ClickHouse offers similar ease with chDB, a library that allows ClickHouse SQL queries to be run directly in Python environments, providing a streamlined setup for local analytics.

In-Memory and Serialization Capabilities

Both databases support in-memory processing, though they differ in approach. DuckDB can operate in-memory by default for fast, temporary analyses, while ClickHouse also provides in-memory storage options through specific storage engines. For data serialization to flat files, ClickHouse is generally faster, thanks to its optimized storage architecture.

Performance on Complex Computations and DataFrame Integration

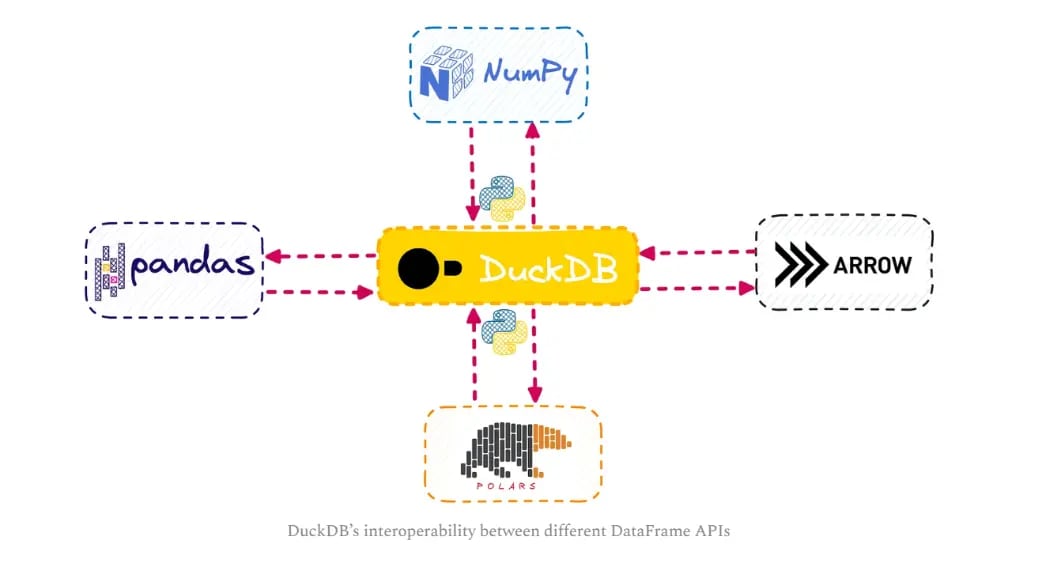

DuckDB excels at handling complex relational data operations, often providing faster local analysis for structured datasets. Its seamless querying of Pandas, Polars and Arrow Dataframes within Python is a key feature that makes it highly useful for data scientists, serving as a powerful in-process SQL engine.

Distributed Scaling vs. Local, Serverless Execution

ClickHouse’s MPP architecture allows for horizontal scaling across nodes, ideal for enterprise-grade, cloud-based analytics workloads. DuckDB, meanwhile, thrives in serverless, single-machine setups and is perfect for tasks like ETL, semi-structured data queries, and quick analyses on local storage.

In Summary,

| Feature | ClickHouse | DuckDB |

|---|---|---|

| Database Type | Column-oriented, OLAP | Column-oriented, OLAP |

| Primary Use Case | Enterprise-grade, big data workloads | Small-to-medium data volumes; data science workflows |

| Adoption | Widely used in large enterprises for high-speed analytics | Popular among data analysts and scientists |

| Deployment | Multi-node, distributed (supports MPP architecture) | Single-machine, embedded (runs in-process) |

| Installation Requirement | Server software typically required (chDB for local SQL) | No server installation needed; embedded within host |

| Data Volume Scale | Suited for multi-terabyte, large datasets | Ideal for tens of GB-level datasets |

| In-Memory Processing | Supported through specific storage engines | Supported with “:memory:” mode (default) |

| Serialization Performance | Faster for serializing flat file data | Slower than ClickHouse for serialization |

| Complex Query Performance | Strong for large-scale aggregation and distributed tasks | Excels with complex computations on relational schema |

| DataFrame Integration | Available via chDB in Python | Directly supports Pandas, Polars, and Arrow |

| Scalability | Highly scalable across multiple nodes | Limited to single-machine; serverless, embedded use |

| Best For | Big data analytics, enterprise BI, web analytics | Local data exploration, data prototyping, ETL tasks |

Performance Comparison: ClickHouse vs. DuckDB

When it comes to high-performance analytical databases, both ClickHouse and DuckDB have unique strengths and limitations.

General Performance Overview

-

ClickHouse generally outperforms DuckDB for larger data volumes and relatively straightforward queries. This strength can be attributed to ClickHouse's columnar storage, distributed nature, and optimizations for large-scale data processing, which allow it to efficiently manage and retrieve massive datasets.

-

DuckDB however, is highly optimized for in-memory data processing and excels at handling complex analytical queries on single-node setups. DuckDB's ability to work seamlessly in-memory allows it to execute queries quickly for moderate data sizes without needing the distributed setup that ClickHouse typically requires for peak performance.

-

Factors Affecting Performance The structure and complexity of the data (like normalized vs. denormalized tables) and query complexity also impact performance for both databases. For instance, ClickHouse performs best with denormalized data, while DuckDB handles normalized data more effectively, especially in analytical tasks.

DuckDB's Strengths and Limitations

DuckDB’s limitations resemble those of other single-node query engines like Polars, DataFusion, and Pandas Although it’s often compared to Spark, it’s a bit of an “apples to oranges” comparison due to Spark’s multi-node, distributed setup. DuckDB, Polars, and similar engines are more suitable for fast, single-node analytics and don’t scale out to multiple nodes like Spark or ClickHouse.

For example, in a recent benchmark involving a huge dataset, DuckDB excelled at in-memory querying. This left us impressed and somewhat surprised, given the common praise for ClickHouse’s speed and efficiency in handling large datasets.

Observations on ClickHouse’s Speed with Memory-Table Engine

ClickHouse avoids disk I/O, decompression, and deserialization. This kind of performance is advantageous when dealing with high-speed requirements and straightforward query patterns.

However, the complexity of queries — especially analytical ones like TPC-DS benchmarks—can challenge ClickHouse’s performance, as it relies heavily on denormalized data for speed. Our test, seemed to amplify the impact of query complexity on ClickHouse’s performance. If anything, this reinforces the need to tailor the setup and data structure based on use case requirements, particularly when handling ClickHouse in scenarios it’s not fully optimized for.

Key Advantages of DuckDB

As discussed earlier, DuckDB stands out for several key advantages, making it a valuable tool for data analysis.

Dependency-Free, Single Binary Deployment

One of the biggest selling points of DuckDB is its minimalist approach to installation. Unlike other databases that require complex setups, DuckDB can be deployed as a single binary with no external dependencies. This makes it incredibly easy to get started with, as there’s no need to configure or maintain a separate database server. You can run it directly within your local environment or even integrate it into your existing workflows with minimal effort.

Querying Data Directly

What sets it apart is its ability to query these Dataframes directly using SQL. This means you can interact with your data in a familiar python-based environment and use the full power of SQL for your analysis, without having to worry about converting between formats or loading the entire dataset into memory. It's like running SQL queries directly on your existing data structures, streamlining your workflows.

Filling the Gap Between Traditional Databases and Data Science Workflows

DuckDB bridges the gap between traditional database management systems and the fast-paced, iterative work often done in data science. For many data scientists, working with large datasets typically means turning to complex and heavyweight systems like PostgreSQL or even Spark. DuckDB, however, offers a simpler, more lightweight alternative while still providing the power of SQL-based analytics. It enables analysts to perform complex queries directly on datasets, whether they're stored locally or in the cloud, without the overhead of setting up a full-fledged database system.

Cost-Effective Analytics: Using DuckDB with Parquet Files on GCS

DuckDB’s cost-effectiveness is becoming a major selling point for companies looking to reduce their cloud analytics costs. For instance, many teams are turning to DuckDB to perform analytics on Parquet files stored in Google Cloud Storage (GCS), rather than using more expensive solutions like BigQuery. BigQuery’s costs can add up quickly with frequent analytical queries, whereas DuckDB enables a "bring-your-own-compute" model, allowing users to leverage their local machines to process cloud data without incurring heavy charges. This makes DuckDB an attractive alternative for data teams looking to cut down on operational costs while still performing powerful analytics.

Efficient Data Handling Without Full Data Loading

One of the key benefits of DuckDB over tools like SQLite or Pandas is its ability to process data without loading the entire file into memory. While Pandas requires the full dataset to be loaded before any analysis can be done, DuckDB allows you to copy compressed data directly into memory, bypassing the need to load everything at once. This not only saves memory but also makes DuckDB more efficient when dealing with large files or datasets.

Enhancing Data Science Workflows: DuckDB and Polars

While Polars is known for its performance in data manipulation, DuckDB offers a unique advantage by being a full-fledged database. DuckDB can read data from a Polars DataFrame without any manual conversion, allowing you to work with data in both systems seamlessly. You can process data in Polars, then pass it to DuckDB for further SQL-based operations, and even save the results directly to the DuckDB database—all without the need for manual copying or reformatting. This smooth integration significantly enhances productivity and streamlines workflows for data scientists.

SQL Support with Advanced Features

Another key advantage of DuckDB is its SQL dialect, which we find to be incredibly powerful. It supports advanced features like macros, which allow for more flexible and reusable queries. This is especially useful for data scientists who need to run complex queries and streamline their analysis. DuckDB also has a functional interface, which means you can work with data in a way similar to Spark or Pandas, but with the power of SQL under the hood. This hybrid approach allows you to transform and manipulate data efficiently, combining the best aspects of both worlds.

The appeal of DuckDB becomes even clearer when considering the limitations that existed before it. Previously, working with smaller datasets locally was manageable with formats like CSV or Parquet, but as data size increased, the process grew challenging. Setting up traditional databases like MySQL or PostgreSQL for these mid-sized tasks was cumbersome, and distributed systems like Spark felt excessive for datasets that didn’t require that scale. DuckDB fills this gap, allowing small-to-medium datasets to be processed locally, without the need for complex database setups.

In modern data analysis, data must often be combined from a wide variety of different sources. Data might sit in CSV files on your machine, in Parquet files in a data lake, or in an operational database. DuckDB has strong support for moving data between many different data sources.

Source: DuckDB beyond the hype

Use Cases and Applications

Both ClickHouse and DuckDB serve unique purposes in data processing, offering complementary strengths for different tasks.

ClickHouse for Large-Scale, Distributed OLAP Workloads

ClickHouse excels in handling large-scale, distributed OLAP workloads. Its MPP architecture scales horizontally, making it perfect for real-time analytics over multi-terabyte datasets. It's used in industries like telecom, finance, and e-commerce where fast query performance on large datasets is crucial. Companies like Yandex and Uber leverage ClickHouse for real-time analytics, making it a top choice for enterprise-scale applications.

DuckDB for Serverless Pipelines and Local Data Processing

DuckDB is ideal for serverless pipelines and local data processing, excelling with small-to-medium datasets It's great for temporary staging in ELT jobs and data transformations, especially when dealing with Parquet and other semi-structured formats.

In embedded systems or sensor data applications, DuckDB’s columnar storage and compression make it highly efficient, processing data in tight memory constraints.

Complementary Roles in the Data Ecosystem

ClickHouse is for large, distributed workloads, while DuckDB handles smaller, local processing tasks. They complement each other, with ClickHouse powering big data and cloud-based analytics, and DuckDB simplifying local, serverless data tasks. Together, they provide a flexible, efficient data pipeline for different analytics needs.

Conclusion

In the evolving landscape of data analytics, both ClickHouse and DuckDB have carved out distinct yet complementary niches. ClickHouse has established itself as a powerhouse for large-scale, distributed OLAP workloads, making it the go-to choice for enterprise-grade deployments handling petabytes of data. DuckDB, meanwhile, has revolutionized local data analysis by offering a lightweight, embedded solution that seamlessly integrates with modern data science tools like Pandas, Polars, and Apache Arrow.

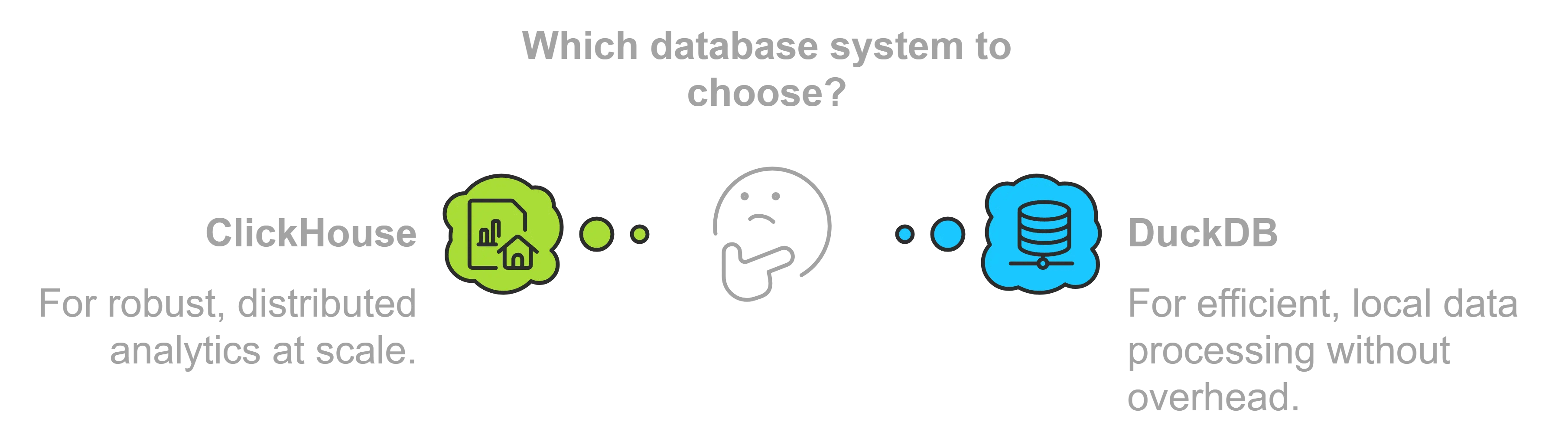

While ClickHouse excels at handling massive distributed datasets with impressive performance, DuckDB shines in scenarios requiring quick, in-process analytics and complex queries on smaller datasets. The choice between these tools ultimately depends on specific use cases: ClickHouse for organizations requiring robust, distributed analytics at scale, and DuckDB for data scientists and analysts who need efficient, local data processing without the overhead of traditional database systems.

As organizations continue to grapple with diverse data processing needs, having both tools in the modern data stack enables teams to choose the right tool for their specific analytical requirements, whether it's processing petabytes in the cloud or analyzing gigabytes on a local machine.

At the end of the day, it’s not about which one is better—it’s about choosing the right tool for the job. ClickHouse powers through big data at scale, while DuckDB gives data scientists the flexibility to run powerful queries on their own machines. Together, they form the perfect duo—the heavyweight and the lightweight—both designed to make data processing faster and more efficient.