Introduction

As the demand for ML models and LLMs continues to grow, so does the need for reliability and scalability. Integrating Kubernetes into model development is a powerful way to meet that need: it lets us streamline model development, decrease costs, and enhance model reliability. We can achieve this by running Ray on Kubernetes. But before that, let's take a look at the lifecycle of an ML model.

ML model lifecycle

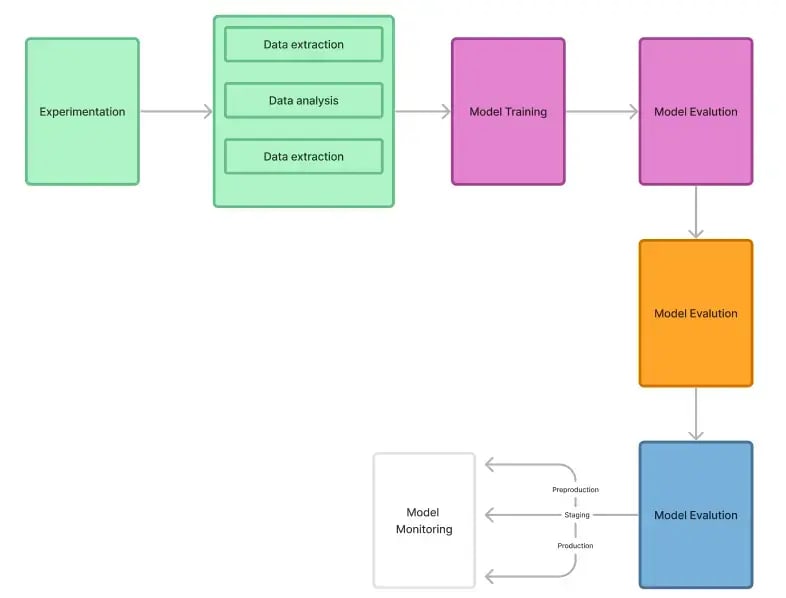

Before delving into MLOps, let's first explore the process of developing an ML model.

- Experimentation: During this phase, data scientists and ML researchers collaborate to design prototype model architectures and create a notebook environment integrated with necessary tools for model development. They identify and analyze the most suitable data for the required use case.

- Data Processing: This stage involves transforming and preparing large datasets for the ML process. Data is extracted from various sources, analyzed, and formatted to be consumed by the model.

- Model Training: ML engineers select algorithms based on the model's use case and train the model using the prepared data.

- Model Evaluation: Custom evaluation metrics are used to assess the effectiveness of the model for the given use case.

- Model Validation: This stage verifies whether the trained model is ready for deployment in the intended environment.

- Model Serving: The model is deployed to the appropriate environment using model serving techniques.

- Model Monitoring: This phase involves tracking the efficiency and effectiveness of the deployed model over time.

What is MLOps

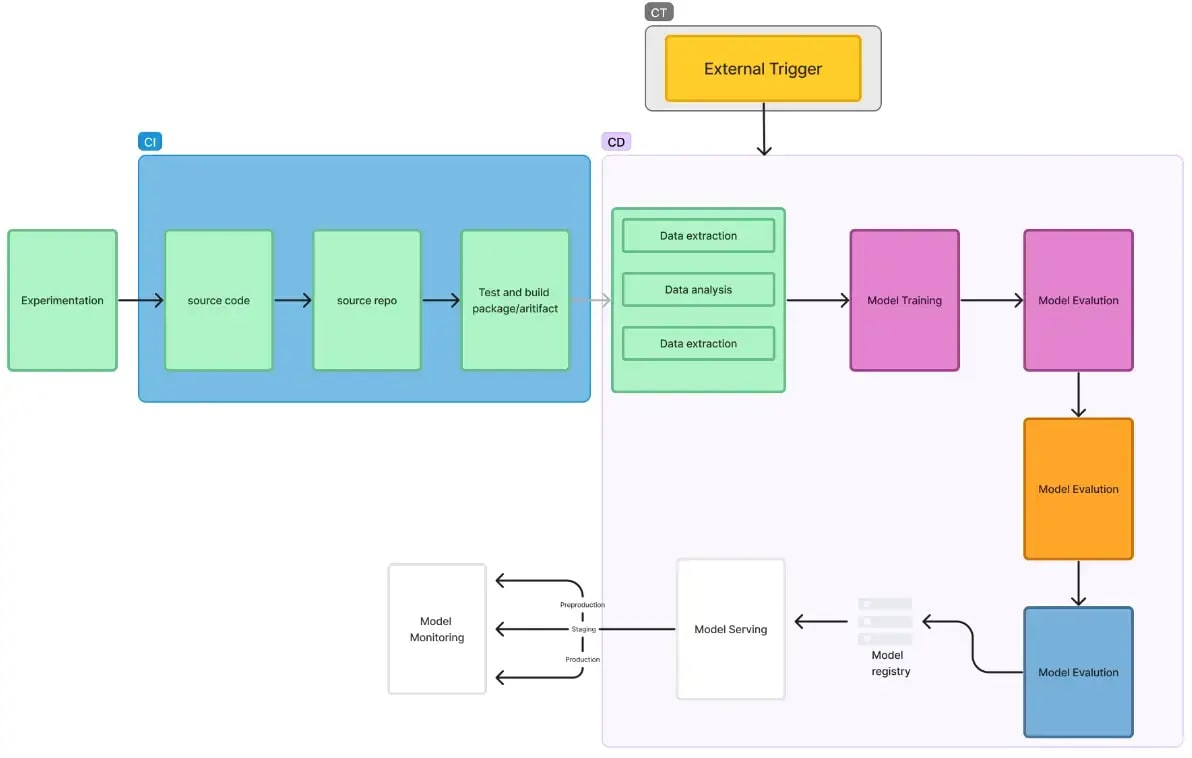

The purpose of MLOps is to simplify and automate the development of ML models. Derived from DevOps principles, MLOps uses pipelines to streamline the process. The illustration above shows the MLOps pipeline; its stages are described below.

- Continuous Integration (CI): Similar to DevOps, CI involves storing our source code in a Git repository, testing it, and generating packages or artifacts necessary for the CD process.

- Continuous Deployment (CD): In the CD process, we train our model using provided data. Once the model is validated for the respective environment, we push it to a Model Registry.

- Continuous Training (CT): CT ensures that our model remains updated by training it on new incoming data. It can be externally triggered when new data is available, prompting the CD process to train and deploy a new model.
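The CT trigger described in the last bullet can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `DataSnapshot`, `should_retrain`, and the 10% growth threshold are hypothetical names and values chosen for the example, not part of any MLOps tool.

```python
from dataclasses import dataclass


@dataclass
class DataSnapshot:
    """Hypothetical summary of a dataset: only its row count is tracked."""
    row_count: int


def should_retrain(trained_on: DataSnapshot, current: DataSnapshot,
                   min_growth_ratio: float = 0.10) -> bool:
    """Return True when enough new data has arrived to justify retraining."""
    if trained_on.row_count <= 0:
        # The model has never seen data, so any data at all justifies training.
        return current.row_count > 0
    new_rows = current.row_count - trained_on.row_count
    return new_rows / trained_on.row_count >= min_growth_ratio


# 200 new rows on top of 1000 is 20% growth, above the assumed 10% threshold.
print(should_retrain(DataSnapshot(1000), DataSnapshot(1200)))
```

In a real pipeline the signal could just as well be a schedule, a data-drift metric, or an event from the data platform; the row count is used here only because it keeps the sketch small.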

Cloud-native approach to AI/ML

Different AI models have different dependencies. Containerizing these models isolates them from one another and lets us deploy them with Kubernetes. Using cloud-native tools for AI/ML workloads provides reliability and scalability as needed; tools like KEDA, for example, enable event-driven scaling of workloads. Cloud-native infrastructure can access data from diverse sources such as block stores and object stores, and that data can then be used for training and retraining AI/ML models. This approach also gives control over how CPUs and GPUs are allocated, and it can reduce costs by running workloads on Spot Instances. In this article, we will explore a cloud-native approach to deploying an AI/ML application on a Kubernetes cluster using KubeRay, a Kubernetes operator for running Ray clusters.
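To make event-driven scaling more concrete, here is a simplified sketch of the kind of calculation an event-driven autoscaler performs: divide the pending work by a per-replica target and clamp the result to the configured bounds. The function name and parameters are assumptions made for this example; a real autoscaler also applies cooldown periods and stabilization windows that are left out here.

```python
import math


def desired_replicas(queue_length: int, target_per_replica: int,
                     min_replicas: int, max_replicas: int) -> int:
    """Return the replica count needed to handle the pending events."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # Round up so that a partially filled replica still gets scheduled.
    wanted = math.ceil(queue_length / target_per_replica)
    # Never go below the minimum or above the maximum.
    return max(min_replicas, min(max_replicas, wanted))


# 45 pending events at 10 events per replica need 5 replicas.
print(desired_replicas(45, 10, min_replicas=1, max_replicas=10))
```

The clamping step is what keeps costs bounded: a sudden burst of events can never push the workload past `max_replicas`.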

What is Ray?

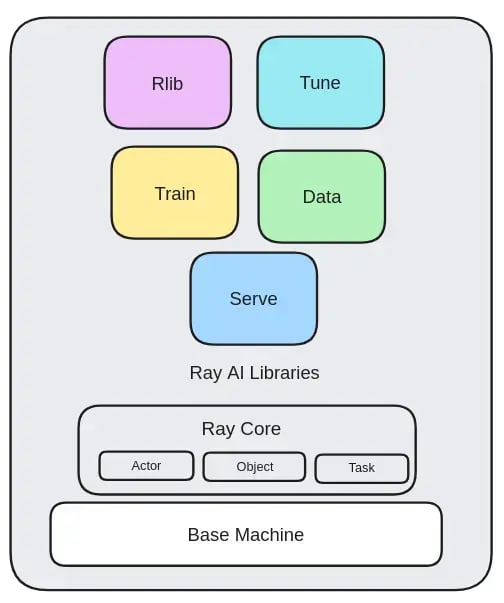

Ray is a framework designed for scaling AI/ML and Python-based applications. It offers a lightweight computing layer that facilitates parallel processing. With Ray, a distributed system is created where the burden of scaling workloads is handled automatically, freeing developers from managing scalability concerns.

- Ray AI libraries: These are open-source Python libraries for ML applications. They are Data, Train, Tune, Serve, and RLlib, each meant for a specific purpose.

  - Data: Used for data processing.

  - Train: Used for distributed training and fine-tuning.

  - Tune: Used for hyperparameter tuning.

  - Serve: Used for model serving.

  - RLlib: Used for reinforcement learning.

- Ray Core: Ray Core provides core primitives such as tasks, actors, and objects.

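a. Tasks: A task enables remote function execution. Decorating a function with `@ray.remote` turns it into a task. The decorator accepts arguments that declare the resources the function needs, for example `num_cpus`, or `num_gpus` when the function must run on a GPU node. Below is one example.

```python
import ray

ray.init()  # start a local Ray instance or connect to an existing cluster


@ray.remote  # enables remote execution of the function
def metrics():
    a = ...  # metric computation elided in the original
    return a


ref = metrics.remote()  # schedules the task on a worker node and returns an object reference
result = ray.get(ref)   # blocks until the task finishes and fetches its return value
```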

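b. Actors: Actors are the class counterpart of tasks. Decorating a class with `@ray.remote` creates a stateful worker whose methods run remotely.

```python
import ray


@ray.remote
class Counter:
    def __init__(self):
        self.value = 0

    def increment(self):
        self.value += 1
        return self.value


counter = Counter.remote()                  # starts the actor in its own worker process
print(ray.get(counter.increment.remote()))  # calls the method remotely and fetches the result
```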

c. Objects: Tasks and actors operate on objects. Objects can be stored anywhere in the Ray cluster as remote objects, and we use object references to work with them.
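In the same spirit as the task and actor examples, the snippet below illustrates how working with references feels in practice. It deliberately uses Python's standard `concurrent.futures` module as an analogy rather than Ray itself, because Ray object references are conceptually similar to futures: submitting work returns a handle immediately, and the value is fetched only when asked for. The `square` function is a placeholder chosen for this sketch.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x: int) -> int:
    """Placeholder workload for the sketch."""
    return x * x


with ThreadPoolExecutor(max_workers=4) as pool:
    # submit() returns a future right away, much like f.remote() returns an object reference.
    futures = [pool.submit(square, n) for n in range(4)]
    # result() blocks until the value is ready, much like ray.get(ref).
    results = [future.result() for future in futures]

print(results)
```

The analogy stops at the programming model: a future lives inside one process, whereas a Ray object reference can point to a value held on another node of the cluster.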

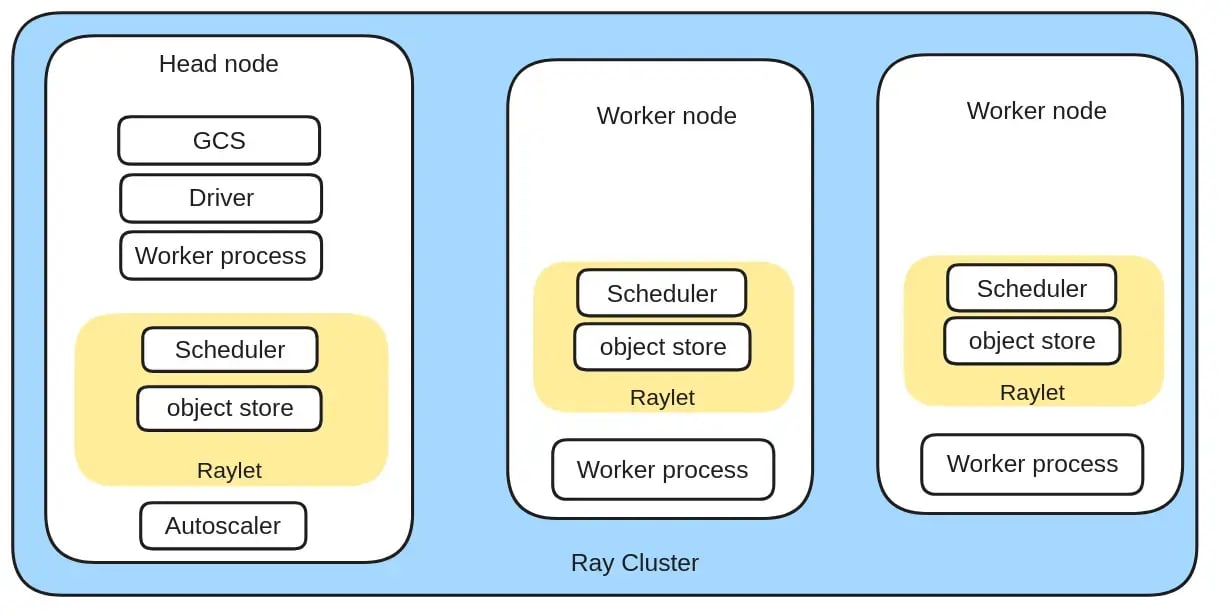

- Ray Cluster: A Ray cluster consists of a set of worker nodes connected to a common Ray head node. The cluster can autoscale, or it can be configured with a minimum and maximum number of workers. On Kubernetes, the head node and each worker node run as pods. A Ray cluster has a single head node, which is somewhat similar to the control plane in Kubernetes and is responsible for scaling the worker nodes. The head node also hosts the GCS (Global Control Service), a core component of the Ray cluster that stores cluster-level metadata. Ray clusters are officially supported on AWS and GCP, with community support on Azure. Using KubeRay, an open-source project from the Ray team, we can deploy a Ray cluster on any Kubernetes cluster.

- Head node: The head node controls the worker nodes and decides where remote functions are executed. It consists of the following components:

  - Raylet: Manages shared resources across the cluster.

  - Driver: The process that runs the main application, that is, the program that calls `ray.init()`.

  - Worker processes: Execute the tasks and actors submitted through Ray Core.

  - Autoscaler: Scales the worker nodes up and down.

  - GCS: Stores metadata related to the Ray cluster.

- Worker node: Worker nodes are managed by the head node. A worker node has the following components:

  - Scheduler: Schedules work onto worker processes.

  - Worker processes: Run your tasks and actors.

  - Object store: An object store shared between nodes.

About KubeRay project

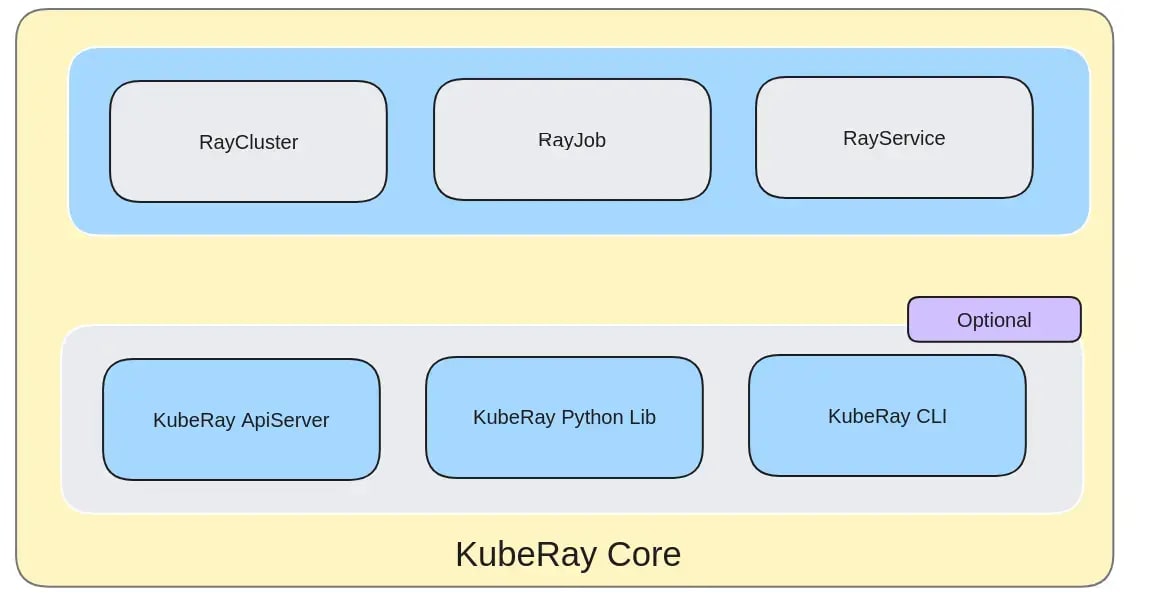

KubeRay is an open-source project from the Ray team for running Ray applications on a Kubernetes cluster. KubeRay is an operator that provides RayCluster, RayJob, and RayService as CRDs (Custom Resource Definitions). KubeRay can be used to manage the lifecycle of LLMs and AI/ML models.

Use cases of KubeRay

- Making a complete MLOps pipeline using the native AI libraries from Ray.

- Scaling the AI/ML workload.

- Build a custom AI/ML platform on top of Kubernetes to enable the reliability of workloads.

- Training large models with Kubernetes and Ray.

- Managing the end-to-end lifecycle of ML models and LLMs.

Architecture of KubeRay

The main components of KubeRay are RayCluster, RayJob, and RayService. The other components, KubeRay ApiServer, KubeRay Python Client, and KubeRay CLI, are community-managed and optional.

- RayCluster: A RayCluster manages the lifecycle of the Ray cluster that runs the application. It consists of a head node (pod) responsible for managing the workers. We can configure a RayCluster with minimum and maximum replicas, and enable autoscaling or event-based scaling using KEDA. The `workerGroupSpecs` section of the demo manifest later in this article shows the specs of a worker node (pod).

- RayJob: A RayJob is responsible for running a Ray job on a RayCluster. A Ray job is a Ray application that we can run on a remote Ray cluster. We can configure a RayJob to create a RayCluster with the required head and worker nodes, execute the job, and clean up by deleting the RayCluster once the job has completed. This approach resembles a native Kubernetes Job.

- RayService: A RayService is responsible for managing a RayCluster and its Ray Serve applications. It enables zero-downtime upgrades and high availability, and it can shift traffic from an unhealthy Ray cluster to a healthy one. If the head node goes down, RayService ensures that the worker nodes stay up and ready to accept traffic.

- KubeRay ApiServer: It can be used to enable a user interface for managing KubeRay resources.

- KubeRay Python Client: It provides libraries to interact with a RayCluster.

- KubeRay CLI: It is a CLI tool for managing KubeRay resources.
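The RayJob behaviour described in the list above (create a cluster, run the job, clean up afterwards) maps onto a handful of fields in the RayJob resource. The sketch below builds such a manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The job name, the entrypoint `python my_script.py`, the group name, and the 60-second TTL are placeholders chosen for this example.

```python
import json


def ray_job_manifest(name: str, entrypoint: str, image: str, ray_version: str,
                     min_workers: int, max_workers: int) -> dict:
    """Build a minimal RayJob manifest that deletes its RayCluster when the job ends."""

    def pod_template(container_name: str) -> dict:
        # Smallest possible pod template: a single container running the Ray image.
        return {"spec": {"containers": [{"name": container_name, "image": image}]}}

    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayJob",
        "metadata": {"name": name},
        "spec": {
            "entrypoint": entrypoint,            # command executed as the Ray job
            "shutdownAfterJobFinishes": True,    # delete the RayCluster after the job
            "ttlSecondsAfterFinished": 60,       # assumed grace period before cleanup
            "rayClusterSpec": {
                "rayVersion": ray_version,
                "headGroupSpec": {
                    "rayStartParams": {},
                    "template": pod_template("ray-head"),
                },
                "workerGroupSpecs": [{
                    "groupName": "workers",      # hypothetical group name
                    "replicas": min_workers,
                    "minReplicas": min_workers,
                    "maxReplicas": max_workers,
                    "rayStartParams": {},
                    "template": pod_template("ray-worker"),
                }],
            },
        },
    }


manifest = ray_job_manifest("sample-job", "python my_script.py",
                            "rayproject/ray-ml:2.7.0", "2.7.0", 1, 3)
print(json.dumps(manifest, indent=2))
```

Generating the manifest from code is a design choice rather than a requirement; it becomes useful when many jobs share the same cluster shape and differ only in their entrypoint.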

Benefits

- Makes AI/ML applications highly scalable.

- Reduces cost by using Spot Instances or event-driven scaling.

- Speeds up applications through parallel execution.

- Brings the reliability, observability, and scalability of the cloud-native approach.

- Integrates seamlessly with Kubernetes.

- KubeRay can run on any cloud provider as well as on bare metal. It only requires Kubernetes and the KubeRay operator to run a Ray application.

Demo

Problem Statement

Deploy a model that summarizes a given text.

Installation

- Kubernetes cluster with 1 GPU node and 1 CPU node. For configuration, refer to the docs.

- kubectl

- Docker Engine

- Python

- Helm

- KubeRay operator and CRDs.

```bash
helm repo add kuberay https://ray-project.github.io/kuberay-helm/
helm repo update
helm install kuberay-operator kuberay/kuberay-operator --version 1.1.0
helm install raycluster kuberay/ray-cluster --version 1.1.0
```

Add the below taint to your GPU node.

| Key | Value | Effect |
|---|---|---|
| ray.io/node-type | worker | NoSchedule |
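The taint keeps ordinary pods off the GPU node, and only pods that carry a matching toleration may be scheduled there. The following sketch shows the matching rule in simplified form, using the taint from the table and the toleration from the RayService manifest in this demo. The `tolerates` function is written for this article as an illustration; it is not a Kubernetes API and it ignores details such as `tolerationSeconds`.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if the toleration matches the taint (simplified Kubernetes rules)."""
    # A toleration without an effect matches taints of every effect.
    effect = toleration.get("effect", "")
    if effect and effect != taint.get("effect"):
        return False

    key = toleration.get("key", "")
    operator = toleration.get("operator", "Equal")  # Equal is the default operator
    if operator == "Exists":
        # Exists ignores the value; an empty key tolerates every taint key.
        return key == "" or key == taint.get("key")

    # Equal requires both the key and the value to match.
    return key == taint.get("key") and toleration.get("value", "") == taint.get("value", "")


gpu_node_taint = {"key": "ray.io/node-type", "value": "worker", "effect": "NoSchedule"}
worker_toleration = {"key": "ray.io/node-type", "operator": "Equal",
                     "value": "worker", "effect": "NoSchedule"}

print(tolerates(worker_toleration, gpu_node_taint))  # the Ray worker pod may be scheduled
print(tolerates({}, gpu_node_taint))                 # a pod without the toleration may not
```

This is why the head pod, which declares no toleration, stays on the CPU node while the worker pod can use the GPU node.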

After applying taint to your GPU node, deploy the RayService.

```yaml
apiVersion: ray.io/v1
kind: RayService
metadata:
  name: text-summarizer
spec:
  serviceUnhealthySecondThreshold: 900
  deploymentUnhealthySecondThreshold: 300
  serveConfigV2: |
    applications:
      - name: text_summarizer
        import_path: text_summarizer.text_summarizer:deployment
        runtime_env:
          working_dir: "https://github.com/ray-project/serve_config_examples/archive/refs/heads/master.zip"
  rayClusterConfig:
    rayVersion: '2.7.0'
    headGroupSpec:
      rayStartParams:
        dashboard-host: '0.0.0.0'
      template:
        spec:
          containers:
            - name: ray-head
              image: rayproject/ray-ml:2.7.0
              ports:
                - containerPort: 6379
                  name: gcs
                - containerPort: 8265
                  name: dashboard
                - containerPort: 10001
                  name: client
                - containerPort: 8000
                  name: serve
              volumeMounts:
                - mountPath: /tmp/ray
                  name: ray-logs
              resources:
                limits:
                  cpu: "2"
                  memory: "8G"
                requests:
                  cpu: "2"
                  memory: "8G"
          volumes:
            - name: ray-logs
              emptyDir: {}
    workerGroupSpecs:
      - replicas: 1
        minReplicas: 1
        maxReplicas: 10
        groupName: gpu-group
        rayStartParams: {}
        template:
          spec:
            containers:
              - name: ray-worker
                image: rayproject/ray-ml:2.7.0
                resources:
                  limits:
                    cpu: 4
                    memory: "16G"
                    nvidia.com/gpu: 1
            tolerations:
              - key: "ray.io/node-type"
                operator: "Equal"
                value: "worker"
                effect: "NoSchedule"
```

To send a request to our model, let's first port-forward the service using the command below.

```bash
kubectl port-forward svc/text-summarizer-serve-svc 8000
```

Now let's create a Python script that sends a request to port 8000.

```python
import requests

# Adjacent string literals are concatenated; each piece ends with a space
# so that words do not run together at the joins.
message = (
    "A large language model (LLM) is a computational model notable for its ability to achieve general-purpose language generation and other natural language processing "
    "tasks such as classification. Based on language models, LLMs acquire these abilities by learning statistical relationships from text documents during a computationally "
    "intensive self-supervised and semi-supervised training process. LLMs can be used for text generation, a form of generative AI, by taking an input text and repeatedly "
    "predicting the next token or word."
)

# Send the text to the Serve application through the port-forwarded service.
print(requests.get("http://localhost:8000/summarize", params={"text": message}).json())
```

Save the above script as summary.py and run it with the command below.

```bash
python summary.py
```

The script prints a summary similar to the following:

```
A large language model (LLM) is a type of computational model that
performs language generation and natural language processing tasks.
It learns from text documents during an intensive training process.
LLMs can generate text by predicting the next word in a sequence
```
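For environments where installing `requests` is not an option, the same call can be sketched with the Python standard library alone. The snippet below shows how the text ends up URL-encoded in the `text` query parameter of `/summarize`, and adds a timeout because the first request can be slow while the model loads. The helper names and the 60-second timeout are assumptions made for this example.

```python
import json
import urllib.parse
import urllib.request


def build_summarize_url(base_url: str, text: str) -> str:
    """Return the /summarize URL with the text URL-encoded as a query parameter."""
    query = urllib.parse.urlencode({"text": text})
    return f"{base_url.rstrip('/')}/summarize?{query}"


def summarize(base_url: str, text: str, timeout_s: float = 60.0):
    """Send a GET request and return the decoded JSON body, or None if the call fails."""
    url = build_summarize_url(base_url, text)
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            return json.loads(response.read().decode("utf-8"))
    except OSError as exc:
        # URLError, HTTPError, and socket timeouts are all subclasses of OSError.
        print(f"request failed: {exc}")
        return None


# Only the URL is built here; call summarize() once the port-forward is running.
print(build_summarize_url("http://localhost:8000", "LLMs predict the next token."))
```

With the port-forward active, `summarize("http://localhost:8000", message)` plays the same role as the `requests.get(...)` call in summary.py.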

Alternatives to KubeRay

Below are some commercial and open-source alternatives to KubeRay.

Conclusion

KubeRay significantly simplifies the management of ML models and LLMs. By leveraging Ray and Kubernetes, we ensure that our models scale based on demand, resulting in cost savings. KubeRay also makes the observability of ML models much easier: we can integrate tools like Prometheus to collect metrics from our AI/ML workloads and visualize them in tools like Grafana. Parallelizing the training of our ML models and LLMs reduces time to market and saves on resource consumption.
