Introduction

AI adoption is growing rapidly and taking many forms: AI cloud services, AI products, AI agents, chatbots, and image and video generation. The global AI market is expected to be valued at approximately $621.19 billion in 2024, with projections indicating it could reach around $1.89 trillion by 2030, reflecting a compound annual growth rate (CAGR) of 20.4% during this period.

Imagine running an AI-powered SaaS product whose users make millions of API calls and consume millions of tokens every day. You’re paying for GPU usage and API requests, but your billing system isn’t keeping up. Revenue leakage and unpredictable costs become a nightmare.

Therefore, the right strategies, tools, and implementations are essential. Building a metering and billing solution in-house can be challenging because of limited internal expertise and time-to-market pressure, yet the available products are not simply plug-and-play either. You must integrate them effectively with your business context and tailor your metering strategy accordingly.

Various solutions exist to tackle this problem, ranging from custom-built metering systems to open-source and commercial billing platforms. These tools help businesses measure, track, and charge for AI services dynamically, ensuring that costs are aligned with actual usage.

By the end of this article, you'll know how to implement a scalable, real-time usage-based billing system for AI workloads that minimizes revenue leakage and tracks costs accurately.

What is usage-based billing?

Usage-based billing (also known as metered billing or consumption-based pricing) is a pricing model where customers are charged based on their actual consumption of a product or service. Unlike traditional subscription-based models, where users pay a fixed fee regardless of usage, usage-based billing ensures that customers pay for what they use—whether it's API requests, data processing, storage, compute time, or AI tokens consumed.

This approach is particularly useful for cloud services, SaaS platforms, AI applications, and infrastructure providers, where demand fluctuates and a one-size-fits-all pricing model doesn’t work.

Why is usage-based billing important?

- Fair and Transparent Pricing: Customers pay only for what they use, which reduces disputes over pricing. This builds trust and improves retention.

- Scalability: Businesses can attract a wider range of users, from startups to enterprises, by offering flexible pricing.

- Revenue Optimization: Providers can capture more revenue from heavy users while still accommodating smaller customers.

- Encourages Adoption: Freemium and pay-as-you-go (PAYG) models lower the barrier to entry, allowing users to try services before committing to larger usage.

- Aligns with Modern Technologies: Cloud computing, AI, and APIs generate dynamic workloads that require metered billing for cost efficiency.

Top players in usage-based billing

A variety of platforms specialize in metering, tracking, and billing for usage-based services. Here are some of the key players (not listed in any specific order):

- Metronome: A robust metering and billing platform designed for SaaS and API-driven businesses. It provides detailed analytics and revenue tracking.

- OpenMeter: An open-source metering system that enables real-time tracking of resource consumption. Ideal for companies that want full control over their billing infrastructure.

- Zenskar: Focuses on automated billing workflows, with advanced pricing models and integrations for SaaS businesses.

- MeterStack: Provides a streamlined way to implement usage-based billing for cloud services and AI applications.

- Amberflo: A comprehensive metering and billing platform that helps businesses implement pay-as-you-go pricing with real-time analytics.

- Lago: An open-source alternative to proprietary billing systems, designed to give companies flexibility in tracking and billing for API consumption, AI workloads, and SaaS.

- Togai: A metering and pricing platform with deep customization options, well suited to complex billing scenarios.

- Chargebee: A well-known subscription management and billing platform that also supports usage-based pricing.

- Zuora: A leader in subscription billing that also provides usage-based monetization capabilities for enterprises.

- Orb: Specializes in usage-based pricing models, with advanced metering, analytics, and invoicing capabilities.

- M3ter: A platform focused on metering and pricing flexibility for B2B SaaS, helping businesses scale their pricing based on usage.

- Stripe: A widely used invoicing and billing platform that has added support for usage-based billing.

Challenges in Usage-Based Billing

Granular Usage Tracking

- While metering tokens is straightforward, accurately tracking compute usage, bandwidth, and other system-level metrics presents a more complex challenge.
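Tokens can be counted from the text itself, but compute is easier to meter where the work actually happens. The sketch below assumes wall-clock processing time is an acceptable proxy for compute; `UsageRecord`, `metered`, and `run_inference` are hypothetical names, and `usage_log` stands in for whatever publishes usage events in a real system.

```python
import time
from dataclasses import dataclass
from functools import wraps

@dataclass
class UsageRecord:
    """Hypothetical usage record: one per handled request."""
    customer_id: str
    metric: str
    value: float

usage_log: list[UsageRecord] = []  # stand-in for an event publisher

def metered(metric: str):
    """Decorator that records the wall-clock milliseconds spent in the wrapped handler."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(customer_id: str, *args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(customer_id, *args, **kwargs)
            finally:
                # Runs even if the handler raises, so time spent on failed calls is still recorded
                elapsed_ms = (time.perf_counter() - start) * 1000
                usage_log.append(UsageRecord(customer_id, metric, elapsed_ms))
        return wrapper
    return decorator

@metered("processing_ms")
def run_inference(customer_id: str, prompt: str) -> str:
    time.sleep(0.01)  # stand-in for model work
    return prompt.upper()

print(run_inference("cust_42", "hello"))            # HELLO
print(usage_log[0].metric, usage_log[0].value > 0)  # processing_ms True
```

Whether time spent on failed calls should be billed is a business decision; recording it at least keeps the data available.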

Real-Time Monitoring

- Billing systems need to process usage data in near real time to avoid overcharging or undercharging. Timely calculations improve the customer experience and give customers quick feedback on their spending.
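Real-time monitoring mostly means updating running totals as each event arrives rather than in a nightly batch. Here is a minimal sketch, assuming a flat rate of $0.01 per 1,000 tokens and hypothetical per-customer spend budgets; `UsageMonitor` is an illustrative name, not part of any billing product.

```python
from collections import defaultdict
from decimal import Decimal

PRICE_PER_1K_TOKENS = Decimal("0.01")  # assumed flat rate for illustration

class UsageMonitor:
    """Keeps a running per-customer token total and flags budget crossings as each event arrives."""

    def __init__(self, budgets: dict):
        self.budgets = budgets          # hypothetical per-customer spend limits in USD
        self.tokens = defaultdict(int)
        self.alerted = set()

    def spend(self, customer_id: str) -> Decimal:
        return Decimal(self.tokens[customer_id]) / 1000 * PRICE_PER_1K_TOKENS

    def record(self, customer_id: str, tokens: int) -> list:
        """Add usage and return any alerts raised by this event."""
        self.tokens[customer_id] += tokens
        budget = self.budgets.get(customer_id)
        # Alert only once, when the running spend first reaches the budget
        if budget is not None and customer_id not in self.alerted and self.spend(customer_id) >= budget:
            self.alerted.add(customer_id)
            return [f"{customer_id} reached ${budget}"]
        return []

monitor = UsageMonitor({"cust_42": Decimal("0.05")})
print(monitor.record("cust_42", 3000))  # []
print(monitor.record("cust_42", 2500))  # ['cust_42 reached $0.05']
print(monitor.spend("cust_42"))         # 0.055
```

`Decimal` is used instead of `float` so that monetary amounts do not pick up binary rounding errors.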

Custom Pricing Models

- AI applications often call for pay-as-you-go, tiered pricing, and overage billing models rather than fixed subscriptions.
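Overage billing is the easiest of these models to get subtly wrong, because it mixes a fixed fee with metered usage. The sketch below assumes a hypothetical plan: a monthly base fee that includes an allowance of tokens, plus a per-1,000-token charge beyond it. Rounding partial blocks of 1,000 up is a design choice made here for illustration; prorating is equally valid.

```python
from decimal import Decimal

def overage_invoice(tokens_used: int, base_fee: Decimal, included_tokens: int,
                    overage_per_1k: Decimal) -> Decimal:
    """Fixed fee covering an allowance, plus a per-1,000-token charge for usage beyond it."""
    overage_tokens = max(0, tokens_used - included_tokens)
    # Bill overage in whole blocks of 1,000 tokens, rounding partial blocks up (ceiling division)
    blocks = -(-overage_tokens // 1000)
    return base_fee + blocks * overage_per_1k

# Hypothetical plan: $20/month includes 1M tokens, then $0.02 per 1,000 tokens
print(overage_invoice(800_000, Decimal("20"), 1_000_000, Decimal("0.02")))    # 20.00
print(overage_invoice(1_250_500, Decimal("20"), 1_000_000, Decimal("0.02")))  # 25.02
```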

Scalability

- High-volume AI workloads demand an event-driven architecture to track and process billing data efficiently.

Demo of usage-based billing for AI product

For our demo, we’ll be using Lago, an open-source metering and billing system, to showcase how usage-based billing can be implemented effectively. However, the concepts covered here can be applied to various billing platforms, allowing you to choose the best approach based on your specific needs.

During this demonstration, we charge according to tokens spent, but users could also be billed per API call, by bandwidth used, by processing time, or on a subscription basis. The system is versatile enough to support many metering methods, depending on business requirements.

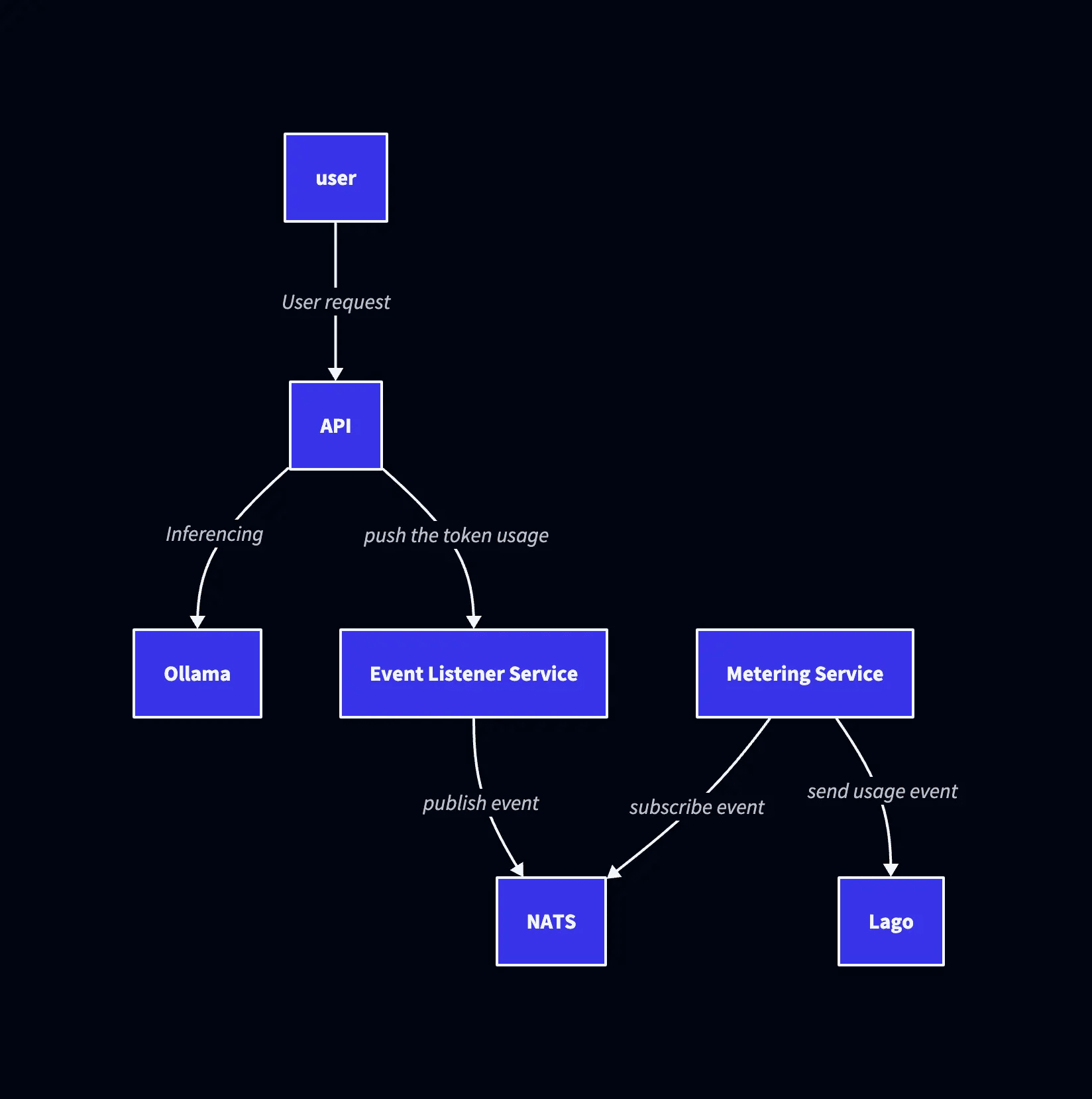

The architecture is composed of a few central building blocks that work in tandem to audit and manage consumption. Users send queries to a model served by Ollama (Llama 3 in this demo). An API service tracks how many tokens each user spends and exposes endpoints for retrieving token activity and query reports.

An Event Listener Service listens for token-related activity and records it. These events are then sent to NATS, a message broker that lets applications exchange messages in real time through shared subjects. The Metering Service subscribes to these events, consumes them, and sends the token usage data to Lago, which handles billing and charging users.

This setup tracks resource consumption efficiently and lets billing extend beyond tokens to API calls, bandwidth, and processing time, all in a single system.
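To make the hand-offs concrete, here is a minimal in-process sketch of the same pipeline. It assumes a Python `queue.Queue` as a stand-in for the NATS subject and a plain list as a stand-in for Lago, so it only illustrates how the stages pass events along; it does not talk to NATS or Lago, and the metric code `tokens` is hypothetical.

```python
import json
import queue
import threading
import uuid

broker = queue.Queue()  # stand-in for the NATS subject
lago_events = []        # stand-in for Lago's event intake

def event_listener(total_tokens: int, subscription_id: str) -> None:
    """Wrap a token count reported by the API in an event and publish it to the broker."""
    event = {
        "transaction_id": str(uuid.uuid4()),
        "external_subscription_id": subscription_id,
        "code": "tokens",  # hypothetical billable metric code
        "properties": {"tokens": total_tokens},
    }
    broker.put(json.dumps(event).encode())

def metering_service() -> None:
    """Consume events from the broker and forward them to billing until a None sentinel arrives."""
    while (msg := broker.get()) is not None:
        lago_events.append(json.loads(msg))

consumer = threading.Thread(target=metering_service)
consumer.start()
for tokens in (120, 480, 75):  # token counts reported by the API
    event_listener(tokens, "sub_001")
broker.put(None)               # signal shutdown
consumer.join()

print(sum(e["properties"]["tokens"] for e in lago_events))  # 675
```

Because the listener and the metering service only share the queue, either side can be restarted or scaled without the other knowing, which is the property the real NATS-based design relies on.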

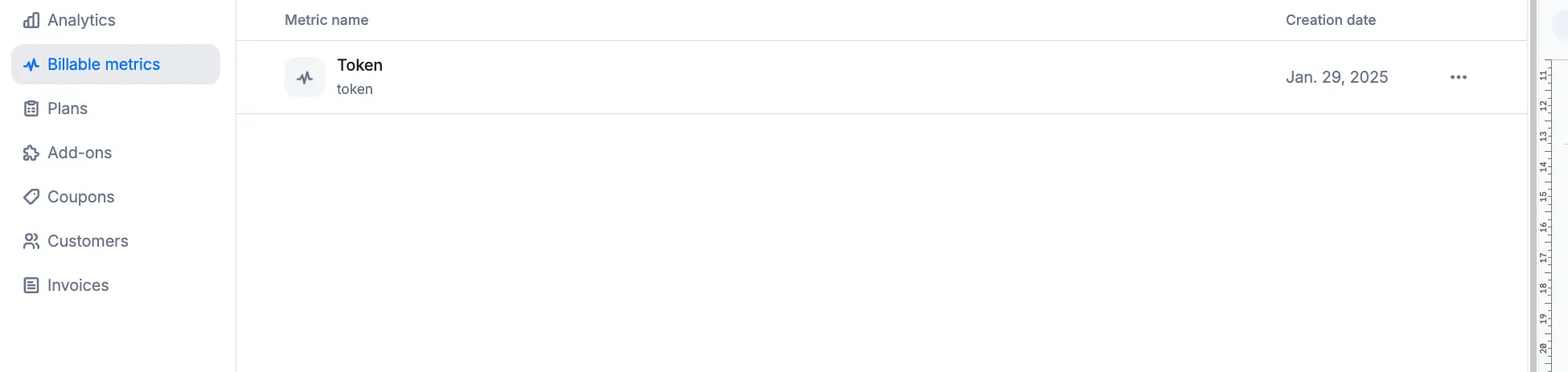

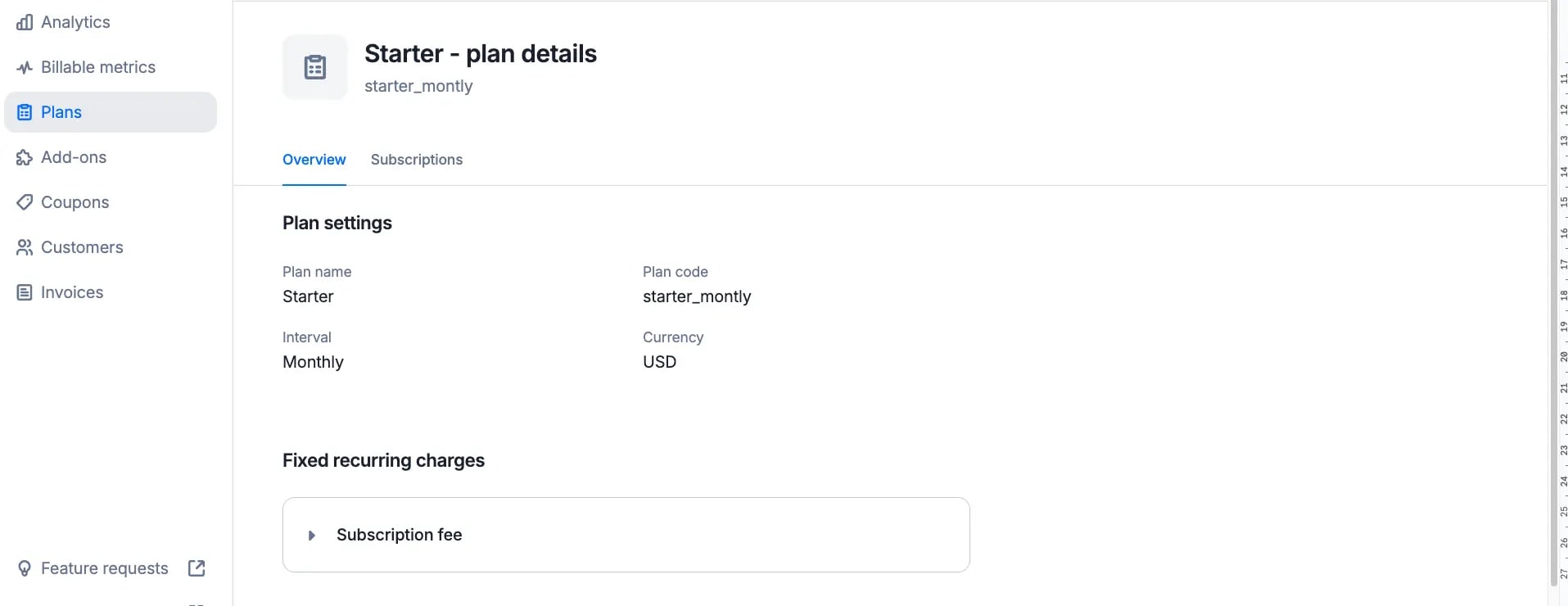

Define Token Usage as a Metered Metric

We will create a metered metric in Lago’s UI specifically for tracking token consumption as users interact with the AI model.
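In this demo the metric is created in Lago's UI, but it helps to see what it boils down to: a metric code plus a rule for aggregating one event property over a billing period. The sketch below assumes a sum aggregation over a `tokens` property. The payload mirrors the shape of Lago's billable-metric API as we understand it (`aggregation_type: "sum_agg"`, `field_name`); treat it as an assumption and check the Lago API reference before using it. The metric code `llm_tokens` is hypothetical.

```python
from decimal import Decimal

# Assumed billable-metric definition: sum the "tokens" property of every event with this code
billable_metric = {
    "billable_metric": {
        "name": "LLM tokens",
        "code": "llm_tokens",  # hypothetical; events must send the same code
        "aggregation_type": "sum_agg",
        "field_name": "tokens",
    }
}

def aggregate_sum(events: list, metric: dict) -> Decimal:
    """Reproduce sum aggregation locally: add up field_name over events matching the metric code."""
    spec = metric["billable_metric"]
    total = Decimal(0)
    for e in events:
        if e["code"] == spec["code"]:
            # Property values may arrive as strings (as in the Go services), so parse them
            total += Decimal(str(e["properties"][spec["field_name"]]))
    return total

events = [
    {"code": "llm_tokens", "properties": {"tokens": "350"}},
    {"code": "llm_tokens", "properties": {"tokens": 1200}},
    {"code": "api_calls", "properties": {"tokens": "999"}},  # different metric, ignored
]
print(aggregate_sum(events, billable_metric))  # 1550
```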

Configure Pricing Plans

We will define different pricing strategies based on token usage, such as pay-as-you-go models (e.g., $0.01 per 1,000 tokens) or tiered pricing to offer discounts for higher usage.
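The two strategies above can be expressed as small pure functions, which is also a handy way to sanity-check invoices. This sketch assumes the $0.01 per 1,000 tokens pay-as-you-go rate from the example and a hypothetical set of graduated tiers. In graduated pricing each rate applies only to the tokens inside its tier; billing platforms (Lago included) typically also offer a volume variant where a single rate applies to all usage.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")

def to_cents(amount: Decimal) -> Decimal:
    """Round a monetary amount to whole cents."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)

def pay_as_you_go(tokens: int, per_1k: Decimal = Decimal("0.01")) -> Decimal:
    """Linear pricing: a flat rate per 1,000 tokens, prorated for partial thousands."""
    return to_cents(Decimal(tokens) / 1000 * per_1k)

# Hypothetical graduated tiers: (upper bound in tokens, or None for unbounded; price per 1,000 tokens)
TIERS = [
    (1_000_000, Decimal("0.010")),
    (10_000_000, Decimal("0.008")),
    (None, Decimal("0.005")),
]

def graduated(tokens: int, tiers=TIERS) -> Decimal:
    """Graduated tiers: each rate applies only to the tokens that fall inside its tier."""
    total, lower = Decimal(0), 0
    for upper, per_1k in tiers:
        in_tier = (tokens if upper is None else min(tokens, upper)) - lower
        if in_tier <= 0:
            break
        total += Decimal(in_tier) / 1000 * per_1k
        if upper is None:
            break
        lower = upper
    return to_cents(total)

print(pay_as_you_go(2_500_000))  # 25.00
print(graduated(2_500_000))      # 22.00
```

With these tiers, 2.5M tokens cost $10.00 for the first million plus $12.00 for the next 1.5M, so heavy users see a lower effective rate than under pay-as-you-go.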

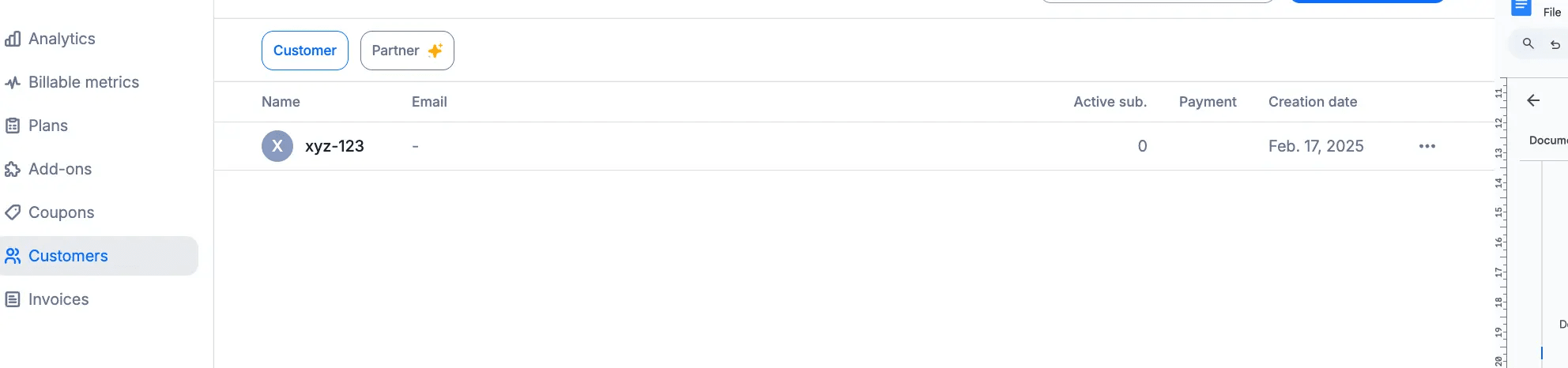

Associate Customers with Billing Plans

- Customers will be onboarded into Lago and associated with the appropriate billing plans.

- Lago will track real-time token consumption and assign it to the correct customer.

- Based on the usage data, invoices will be automatically generated to reflect the actual token consumption.
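Lago performs this mapping internally, but the logic is worth seeing once: events carry a subscription ID, each subscription belongs to a customer and a plan, and the invoice is the priced sum of that subscription's usage. The sketch below assumes a hypothetical subscription table with flat per-1,000-token rates and rounds each customer's total to cents once, after summing.

```python
from collections import defaultdict
from decimal import Decimal, ROUND_HALF_UP

# Hypothetical mapping kept by the billing system: subscription -> (customer, price per 1,000 tokens)
SUBSCRIPTIONS = {
    "sub_001": ("acme", Decimal("0.01")),
    "sub_002": ("globex", Decimal("0.008")),
}

def build_invoices(events: list) -> dict:
    """Sum each subscription's token events, price them with its plan, and total per customer."""
    tokens = defaultdict(int)
    for e in events:
        tokens[e["external_subscription_id"]] += int(e["properties"]["tokens"])
    invoices = defaultdict(Decimal)
    for sub_id, used in tokens.items():
        customer, per_1k = SUBSCRIPTIONS[sub_id]
        invoices[customer] += Decimal(used) / 1000 * per_1k
    # Round each invoice total to cents once, after summing, to avoid accumulating rounding error
    return {c: amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP) for c, amount in invoices.items()}

events = [
    {"external_subscription_id": "sub_001", "properties": {"tokens": "40000"}},
    {"external_subscription_id": "sub_002", "properties": {"tokens": "250000"}},
    {"external_subscription_id": "sub_001", "properties": {"tokens": "15500"}},
]
print(build_invoices(events))  # {'acme': Decimal('0.56'), 'globex': Decimal('2.00')}
```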

Background: how this sample app works

On the technical side, the API is written in Python and uses LangChain callbacks with a Hugging Face Llama tokenizer to count tokens accurately. The Event Listener Service and Metering Service are written in Go for efficient event handling and processing. NATS serves as the message broker for real-time communication between services, and the Metering Service sends the processed token usage data to Lago for billing. This architecture provides accurate tracking, scalable event processing, and flexible billing options beyond tokens, such as API calls or bandwidth usage.

API

- After each LLM call, the API sends the total token count to the Go service at `:8080/token`:

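```python
import requests
from langchain_core.callbacks import BaseCallbackHandler
from transformers import AutoTokenizer


class TokenTrackingHandler(BaseCallbackHandler):
    """LangChain callback that counts prompt and completion tokens with a Llama tokenizer."""

    def __init__(self):
        self.tokenizer = AutoTokenizer.from_pretrained("hf-internal-testing/llama-tokenizer")
        self.query_history = []  # Store token usage history
        self.input_tokens = 0
        self.current_query = {}

    def on_llm_start(self, serialized, prompts, **kwargs):
        # Count tokens across all prompts sent to the model
        self.input_tokens = sum(len(self.tokenizer.encode(p)) for p in prompts)
        self.current_query = {"query": prompts[0], "input_tokens": self.input_tokens, "output_tokens": 0}

    def on_llm_end(self, response, **kwargs):
        # response.generations holds one list of candidates per prompt; count the first candidate
        self.output_tokens = sum(len(self.tokenizer.encode(g[0].text)) for g in response.generations if g)
        total = self.input_tokens + self.output_tokens
        self.current_query.update({"output_tokens": self.output_tokens, "total_tokens": total})
        self.query_history.append(self.current_query)

        # Send token usage to the Go service; log failures instead of raising so the user's request completes
        try:
            requests.post("http://localhost:8080/token", json={"total_tokens": total}, timeout=5)
        except requests.RequestException as exc:
            print(f"Failed to report token usage: {exc}")
```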

Real-Time Event Processing with Go and NATS

- A Go service receives token counts from the API (via the POST to `:8080/token`) and publishes them as token usage events to NATS (a high-performance messaging system).

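- NATS provides:

  - Reliability: With JetStream enabled, token events are persisted and redelivered until a consumer acknowledges them. A plain core NATS publish, as used in the code below, is at-most-once.

  - Scalability: Handles large volumes of AI requests.

  - Auditability: JetStream streams retain events, enabling historical tracking of token consumption.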

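```go
import (
	"encoding/json"
	"fmt"
	"time"

	"github.com/nats-io/nats.go"
)

// nc is the service's NATS connection, opened at startup (for example with nats.Connect).
// generateUniqueTransactionID is a helper defined elsewhere in the service.
var nc *nats.Conn

// publishTokenToNATS sends the token count to NATS
func publishTokenToNATS(totalTokens string) {
	// Generate a unique transaction ID; Lago uses it to deduplicate events
	transactionID := generateUniqueTransactionID()

	// Create event data in the shape Lago expects
	event := map[string]interface{}{
		"transaction_id":           transactionID,
		"external_subscription_id": "<external_id>",
		"code":                     "<metrics_name>",
		"timestamp":                fmt.Sprintf("%d", time.Now().Unix()),
		"properties": map[string]interface{}{
			"tokens": totalTokens,
		},
	}

	// Convert event to JSON
	eventJSON, err := json.Marshal(event)
	if err != nil {
		fmt.Println("Failed to marshal event:", err)
		return
	}

	// Publish event to NATS (core publish is at-most-once and does not wait for a subscriber)
	if err := nc.Publish("events.lago", eventJSON); err != nil {
		fmt.Println("Failed to publish event:", err)
		return
	}

	fmt.Println("Published event:", string(eventJSON))
}
```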
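The publishing code calls `generateUniqueTransactionID`, whose body is not shown. What matters is that the ID is created once per unit of usage, so any retry or redelivery carries the same ID and can be recognized as a duplicate. Lago documents `transaction_id` as the key it uses for this deduplication. The Python sketch below is a hypothetical counterpart: a UUID4-based generator plus an in-memory idempotent sink; a production sink would need persistent, bounded storage for seen IDs.

```python
import uuid

def generate_transaction_id() -> str:
    """Hypothetical counterpart of generateUniqueTransactionID: a random UUID4 string."""
    return str(uuid.uuid4())

class IdempotentSink:
    """Accepts each transaction_id at most once, so redelivered or retried events are not billed twice."""

    def __init__(self):
        self.seen = set()
        self.total_tokens = 0

    def accept(self, event: dict) -> bool:
        tx = event["transaction_id"]
        if tx in self.seen:
            return False  # duplicate: already counted
        self.seen.add(tx)
        self.total_tokens += int(event["properties"]["tokens"])
        return True

event = {"transaction_id": generate_transaction_id(), "properties": {"tokens": "512"}}
sink = IdempotentSink()
print(sink.accept(event), sink.accept(event), sink.total_tokens)  # True False 512
```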

Metering and Lago Integration

- A subscriber service listens to NATS for token usage events.

- It forwards the data to Lago for real-time billing calculation.

- Lago applies pricing rules and updates the customer’s invoice accordingly.

```go
import (
	"encoding/json"
	"fmt"

	"github.com/getlago/lago-go-client"
)

// handleEventMessage processes the event message and sends it to Lago.
// sendEventToLago is a helper defined elsewhere in the service.
func handleEventMessage(data []byte) {
	// Parse the event from the NATS message
	var event map[string]interface{}
	if err := json.Unmarshal(data, &event); err != nil {
		fmt.Printf("Failed to parse event: %v\n", err)
		return
	}

	// Extract the required fields with checked type assertions, so a malformed
	// message is logged and skipped instead of panicking the subscriber
	transactionID, ok1 := event["transaction_id"].(string)
	externalSubscriptionID, ok2 := event["external_subscription_id"].(string)
	code, ok3 := event["code"].(string)
	timestamp, ok4 := event["timestamp"].(string)
	properties, ok5 := event["properties"].(map[string]interface{})
	if !ok1 || !ok2 || !ok3 || !ok4 || !ok5 {
		fmt.Printf("Skipping malformed event: %s\n", data)
		return
	}

	// Forward the token count as a string (e.g. "1234")
	tokens := fmt.Sprintf("%v", properties["tokens"])

	// Create Lago event input
	eventInput := &lago.EventInput{
		TransactionID:          transactionID,
		ExternalSubscriptionID: externalSubscriptionID,
		Code:                   code,
		Timestamp:              timestamp,
		Properties: map[string]interface{}{
			"tokens": tokens,
		},
	}

	// Send the event to Lago
	sendEventToLago(eventInput)
}
```
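If you are not using the Go client, the forwarding step amounts to validating the event and wrapping it in the JSON body for Lago's events endpoint. This sketch assumes Lago's REST API accepts `POST /api/v1/events` with the event nested under an `"event"` key and a Bearer API key; the base URL and key are placeholders, and `build_lago_request` is a hypothetical helper. It only builds the request with the standard library; it does not send it.

```python
import json
import urllib.request

REQUIRED = ("transaction_id", "external_subscription_id", "code", "timestamp", "properties")

def build_lago_request(event: dict, api_url: str, api_key: str) -> urllib.request.Request:
    """Validate a NATS event and wrap it as a Lago events API request (not sent here)."""
    missing = [f for f in REQUIRED if f not in event]
    if missing:
        raise ValueError(f"malformed event, missing: {missing}")
    body = {"event": {
        "transaction_id": event["transaction_id"],
        "external_subscription_id": event["external_subscription_id"],
        "code": event["code"],
        "timestamp": event["timestamp"],
        # Forward token counts as strings, matching the Go handler
        "properties": {"tokens": str(event["properties"]["tokens"])},
    }}
    return urllib.request.Request(
        f"{api_url.rstrip('/')}/api/v1/events",
        data=json.dumps(body).encode(),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

req = build_lago_request(
    {"transaction_id": "tx-1", "external_subscription_id": "<external_id>",
     "code": "<metrics_name>", "timestamp": "1718000000", "properties": {"tokens": 640}},
    api_url="http://localhost:3000", api_key="<lago_api_key>",
)
print(req.method, req.full_url)  # POST http://localhost:3000/api/v1/events
```

Passing `req` to `urllib.request.urlopen` would send it; rejecting malformed events before that point keeps bad data out of invoices.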

Automated Customer Billing

- Lago maps token usage to customer profiles and applies relevant pricing plans.

- Invoices are generated dynamically, reflecting real-time consumption.

- Customers can access Lago’s UI or API to monitor their usage and billing.

Why This Architecture is Scalable:

- Service Independence - Each service (API, Event Listener, Metering) runs independently without direct coupling, allowing horizontal scaling by adding more instances of any service when needed.

- Message Queue Architecture - NATS carries high volumes of events between services and acts as a buffer during traffic spikes; with JetStream persistence enabled, events are not lost during peak loads.

- Distributed Processing - Multiple instances of services can process requests in parallel across different machines, distributing the workload and preventing single-point bottlenecks.
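Distributed processing of this kind corresponds to what NATS calls queue groups: several subscribers share one subject, and each message is delivered to only one of them. The sketch below imitates that competing-consumers pattern in-process, using threads and a shared `queue.Queue` as an assumption for illustration rather than NATS itself. It shows that work spreads across workers while every event is still handled exactly once.

```python
import queue
import threading
from collections import Counter

events = queue.Queue()
processed = []
lock = threading.Lock()

def worker(name: str) -> None:
    """One metering instance: take the next event from the shared queue until told to stop."""
    while (tokens := events.get()) is not None:
        with lock:
            processed.append((name, tokens))

N_WORKERS = 3
threads = [threading.Thread(target=worker, args=(f"meter-{i}",)) for i in range(N_WORKERS)]
for t in threads:
    t.start()
for tokens in range(1, 101):  # 100 token-usage events
    events.put(tokens)
for _ in threads:
    events.put(None)          # one stop signal per worker, queued after all events
for t in threads:
    t.join()

print(len(processed), sum(tok for _, tok in processed))  # 100 5050
print(Counter(name for name, _ in processed))            # split across workers varies per run
```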

Use cases for usage-based billing

- SaaS AI Platforms: Charge users based on token usage, API calls, or processing time.

- Multi-Tenant Enterprise Applications: Track usage across teams or projects, enabling fair cost allocation.

- AI-Powered APIs: Monetize API requests with transparent metering, considering factors like response size or execution time.

- Hybrid Pricing Models: Combine subscriptions with pay-as-you-go billing, including bandwidth, storage, or compute usage.

- Cloud Services & Infrastructure: Bill based on actual resource consumption, such as storage, network traffic, or CPU cycles.

Next steps

Everyone likes free beer, but as a for-profit business you should aim to minimize revenue leakage while providing transparent pricing, chargeback, and a great user experience to your customers.

Your AI workloads are scaling, and your billing should too. Don’t let revenue slip through the cracks. Let’s talk about how you can implement a seamless metering and billing system today.