In today's cloud-native landscape, managing storage effectively is crucial for running stateful applications in Kubernetes. As organizations move towards containerization, the need for reliable, scalable, and performant storage solutions becomes paramount. This comprehensive guide will explore Rook-Ceph, a leading storage orchestrator for Kubernetes, and dive deep into its performance tuning aspects.

Whether you're running a small development cluster or a large production environment, understanding how to optimize Rook-Ceph can significantly impact your application's performance and reliability. We'll cover everything from basic setup to advanced tuning parameters, helping you make informed decisions about your storage infrastructure.

What is Rook?

Rook is a powerful storage solution within the Kubernetes ecosystem, providing a robust framework for managing distributed storage. Unlike traditional storage solutions that rely on proprietary hardware or specialized infrastructure, Rook integrates Ceph—a highly scalable, open-source storage platform—directly into Kubernetes through the Rook operator.

Rook turns distributed storage systems into self-managing, self-scaling, self-healing storage services. It automates the tasks of a storage administrator: deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management.

What distinguishes Rook from other storage solutions is its cloud-native approach. While solutions like NetApp Trident or Portworx require external storage systems or proprietary components, Rook-Ceph turns standard Kubernetes worker nodes into a software-defined storage cluster. This approach eliminates the need for external storage infrastructure, reduces vendor lock-in, and provides a fully integrated storage experience within the Kubernetes environment.

Why does Rook exist?

Rook exists to solve one of the most challenging problems for containerized applications: persistent storage. Containers are ephemeral and pods are rescheduled across nodes, so the hard part is providing reliable, scalable, and performant storage in such a dynamic environment.

Features of Rook

Rook provides a single platform for:

- Block storage (RBD): Ideal for databases and virtual machines.

- File storage (CephFS): Supports shared file systems.

- Object storage (RGW): Compatible with the Amazon S3 API.

Key features of Rook are:

- Storage type: Block, object and file

- Hardware agnostic: Works with any storage devices your nodes already have

- Scalability: Highly scalable

- Reliability: High availability and data protection

- Performance: High performance

- Ease of use: Easy to deploy

- Open source: Yes

- Monitoring: Includes the built-in Ceph Dashboard, as well as metrics collectors and exporters for Prometheus and Grafana dashboards for Ceph clusters, OSDs, and pools.

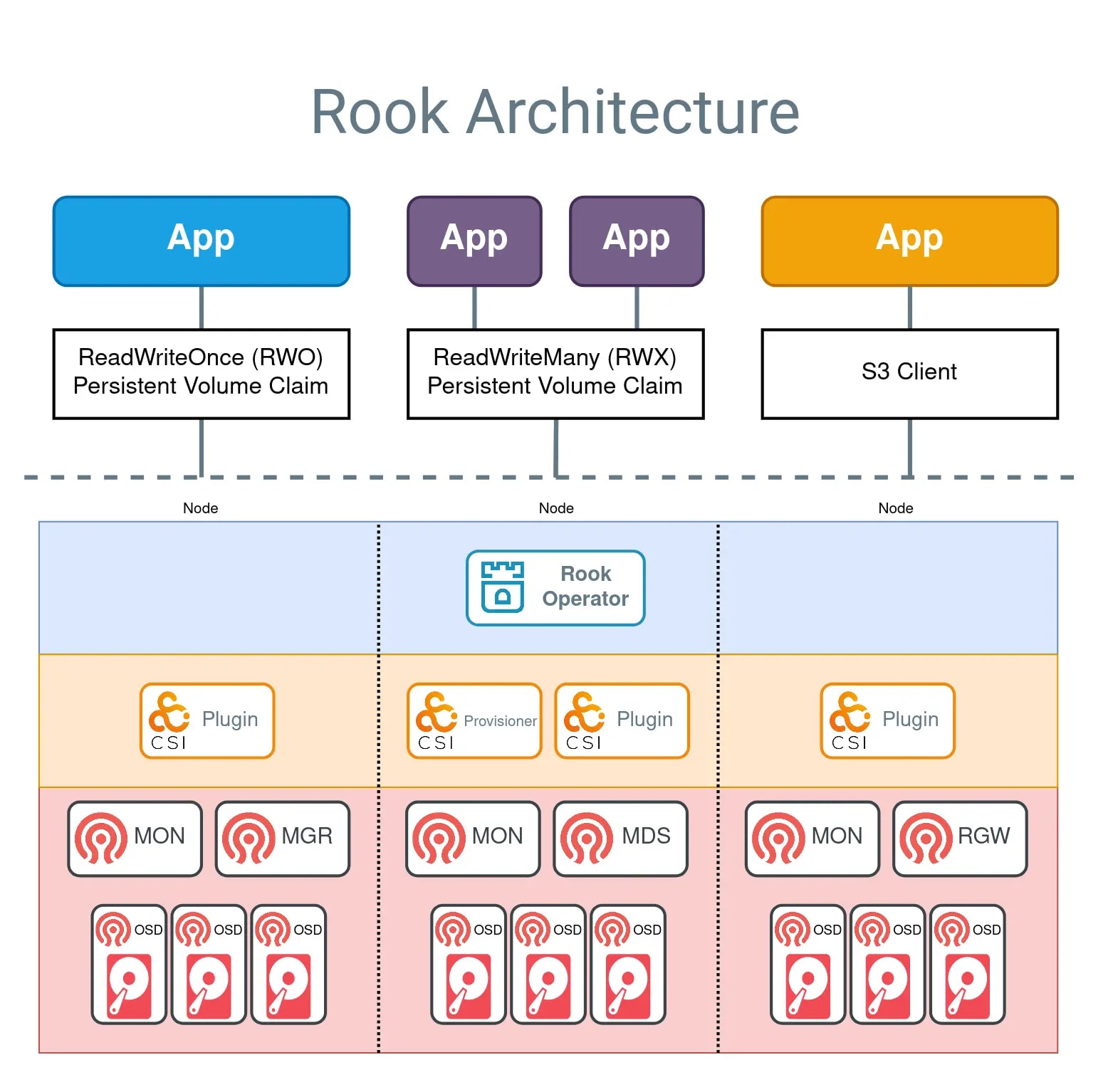

Storage architecture

Rook enables Ceph storage to run on Kubernetes using Kubernetes primitives. With Ceph running in the Kubernetes cluster, Kubernetes applications can mount block devices and filesystems managed by Rook, or can use the S3/Swift API for object storage. The Rook operator automates configuration of storage components and monitors the cluster to ensure the storage remains available and healthy.

The Rook operator is a simple container that has all that is needed to bootstrap and monitor the storage cluster. The operator will start and monitor Ceph monitor pods, the Ceph OSD daemons to provide RADOS storage, as well as start and manage other Ceph daemons. The operator manages CRDs for pools, object stores (S3/Swift), and filesystems by initializing the pods and other resources necessary to run the services.

The operator will monitor the storage daemons to ensure the cluster is healthy. Ceph mons will be started or failed over when necessary, and other adjustments are made as the cluster grows or shrinks. The operator will also watch for desired state changes specified in the Ceph custom resources (CRs) and apply the changes.

Rook automatically configures the Ceph-CSI driver to mount the storage to your pods.

Installation

This guide walks you through setting up a Ceph storage cluster using Rook on Minikube, and it highlights the key changes required in your cluster manifest for a demo or test environment.

Prerequisites

kubectl: Ensure you have the latest version installed.

minikube: Version 1.23 or higher is required.

WARNING: Avoid running this demo on KIND clusters or k3s, as they have been known to cause data loss.

Start Minikube with an extra disk

Make sure your Minikube cluster is started with enough disk space and an extra disk for Rook-Ceph’s persistent storage.

On Linux

```bash
minikube start --disk-size=40g --extra-disks=1 --driver kvm2
```

On macOS

```bash
minikube start --disk-size=40g --extra-disks=1 --driver hyperkit
```

Deploy the Rook-Ceph Operator

```bash
git clone --single-branch --branch master https://github.com/rook/rook.git
cd rook/deploy/examples
kubectl create -f crds.yaml -f common.yaml -f operator.yaml
```

After applying these files, verify that the Rook-Ceph operator pod is in the Running state:

```bash
kubectl -n rook-ceph get pod
```

Deploy the Rook-Ceph cluster

```bash
kubectl create -f cluster-test.yaml
```

Deploy the StorageClass for block storage (RBD)

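```bash
# The RBD StorageClass examples live under csi/rbd/; the -test variant creates a
# single-replica pool, which suits a one-node Minikube cluster.
kubectl create -f csi/rbd/storageclass-test.yaml
```

Deploy the PVC and a Sample Application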

In this step, you'll create a PersistentVolumeClaim (PVC) that uses the rook-ceph-block StorageClass and deploy a sample application (an Nginx container) that mounts this volume. This confirms that your Ceph block storage is working properly and that the volume is correctly attached to the pod.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-block-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: rook-ceph-block
  resources:
    requests:
      storage: 5Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ceph-demo-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ceph-demo
  template:
    metadata:
      labels:
        app: ceph-demo
    spec:
      containers:
        - name: demo-container
          image: nginx:latest
          volumeMounts:
            - name: ceph-storage
              mountPath: /usr/share/nginx/html
      volumes:
        - name: ceph-storage
          persistentVolumeClaim:
            claimName: ceph-block-pvc
```

Save both manifests to a file (for example, ceph-demo.yaml) and apply them with `kubectl apply -f ceph-demo.yaml`. Once applied, check the PVC status to ensure it is Bound:

```bash
kubectl get pvc ceph-block-pvc
```

Validate the mounted volume

Validate the mounted Ceph block storage by opening a shell in the pod with `kubectl exec -it <pod-name> -- /bin/sh` and running `df -h` to check that the volume is listed.
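
If you prefer a non-interactive check, the same validation fits in one command. A minimal sketch, assuming the Deployment name and mount path from the manifest above; `kubectl exec` accepts a `deploy/<name>` target and picks one of its pods.

```bash
# Show only the Ceph-backed mount inside a pod of the demo Deployment.
kubectl exec deploy/ceph-demo-deployment -- df -h /usr/share/nginx/html
```

The Filesystem column should show an RBD device (typically /dev/rbd0) with a size of about 5 GB.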

Best practices: Rook-Ceph on Kubernetes

Storage media selection (HDD vs. SSD/NVMe)

The choice of storage media has a major impact on Rook-Ceph's performance. Where the budget allows, use high-performance SSDs.

For OSDs (Object Storage Daemons):

- SSDs/NVMe drives: Ideal for the BlueStore WAL (write-ahead log) and DB partitions (the journal in the older FileStore backend). They deliver significantly higher IOPS and lower latency than HDDs, which is crucial for metadata-intensive operations.

- HDDs: Can be used for the actual data storage if cost is a concern, especially for large data sets where throughput matters more than IOPS.
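
In Rook, this HDD-for-data, SSD/NVMe-for-metadata layout can be expressed with the `metadataDevice` OSD setting in the CephCluster `storage` section. The sketch below writes a hypothetical fragment to review and merge into `cluster.yaml` before the cluster is created: the file name `storage-fragment.yaml`, the node name `node-a`, and the device names `sdb` and `nvme0n1` are placeholders, and the option should be confirmed against the Rook OSD configuration docs for your version. It only takes effect when OSDs are provisioned, not for existing OSDs.

```bash
# Hypothetical storage fragment for cluster.yaml: OSD data on an HDD (sdb),
# BlueStore DB/WAL on an NVMe device (nvme0n1). All names are placeholders.
cat <<'EOF' > storage-fragment.yaml
spec:
  storage:
    useAllNodes: false     # required so the explicit node list below is used
    useAllDevices: false
    nodes:
      - name: "node-a"
        devices:
          - name: "sdb"
        config:
          metadataDevice: "nvme0n1"
EOF
```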

For MONs and MGRs:

- SSDs are strongly recommended as MONs handle critical cluster metadata that requires quick access.

- Keep MON data on disks separate from the OSD disks to prevent resource contention.

Planning and Distributing Rook-Ceph MONs Across Nodes for High Availability

The Ceph monitors (mons) are the brains of the distributed cluster. They control all of the metadata that is necessary to store and retrieve your data as well as keep it safe. If the monitors are not in a healthy state, you risk losing all the data in your system.

We therefore need multiple mons working together, each keeping a copy of the metadata, to provide redundancy. Quorum requires a strict majority of mons, so for the highest availability use an odd number, such as 3 or 5. Exactly half of the mons is not enough: if you had two mons and one went down, the remaining mon would be only 1 of 2, which is not a majority, and the cluster would lose quorum.
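
The majority rule can be checked with a few lines of shell arithmetic. An illustrative sketch with hypothetical helper names: quorum needs floor(n/2) + 1 mons, so the number of mon failures a cluster survives is n minus that.

```bash
#!/usr/bin/env bash
# Quorum arithmetic for Ceph monitors: a quorum needs a strict majority.

# Smallest number of mons that forms a majority of $1 mons.
quorum_size() {
  local mons=$1
  echo $(( mons / 2 + 1 ))
}

# How many mons can fail while the rest still form a quorum.
tolerated_failures() {
  local mons=$1
  echo $(( mons - $(quorum_size "$mons") ))
}

for n in 1 2 3 4 5; do
  echo "mons=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

The output shows that 2 mons tolerate no failures and 4 mons tolerate only one, the same as 3, which is why odd counts are recommended.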

For production environments, run at least three nodes so that each mon can be placed on a separate node. The corresponding configuration in cluster.yaml is:

```yaml
spec:
  mon:
    allowMultiplePerNode: false
    count: 3
```

Hardware Resource Requirements and Requests

Kubernetes can track the resources available on each node and schedule applications, such as the Ceph daemons, automatically. It uses resource requests to do this. For Rook, we are mainly concerned with how Kubernetes schedules the Ceph daemons.

Kubernetes offers two kinds of resource settings: Requests and Limits. Requests govern scheduling. Limits cap what a container may consume: a container that exceeds its memory limit is killed and restarted (OOM-killed), and one that exceeds its CPU limit is throttled.

When Ceph has hardware requirements, treat them as Requests, not Limits. All Ceph daemons are critical for storage, so it is best never to set resource limits on Ceph pods. If Ceph daemons are consuming more than their Requests, a failure scenario is probably under way, and killing or throttling a daemon at its Limit is likely to make an already bad situation worse. This can create a "thundering herd" situation where failures synchronize and magnify.

Generally, storage should be given minimum resource guarantees, and other applications should be limited so they do not interfere. This guideline applies to bare-metal storage deployments too, not only to Kubernetes.

All of the recommendations below can be affected by how the Ceph daemons are configured, for example by any caching-related settings. Tuning individual daemon configurations is out of scope for this document.

Resource Requests - MON/MGR

Resource Requests for MONs and MGRs are straightforward. MONs try to keep memory usage to around 1 GB, but usage can grow in failure scenarios. We recommend requesting 4 GB RAM and 4 CPU cores.

Recommendations for MGRs are harder to make, since enabling more modules means higher usage. We recommend starting with 2 GB RAM and 2 CPU cores for MGRs. Check the actual usage of your deployment, and do not forget to consider usage during failure scenarios.

MONs:

- Request 4 CPU cores

- Request 4GB RAM (2.5GB minimum)

MGR:

- Memory will grow the more MGR modules are enabled

- Request 2 GB RAM and 2 CPU cores
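
In Rook, these requests go in the `resources` section of the CephCluster spec, keyed by daemon type (`mon`, `mgr`, and so on). A sketch that applies them to an existing cluster with a merge patch, setting requests only and no limits, per the guidance above. Note that Kubernetes `Gi` units are slightly larger than GB, and that the operator restarts the mon and mgr pods to apply the change; the same `resources` block can go in `cluster.yaml` instead.

```bash
# Look up the CephCluster name (cluster.yaml and cluster-test.yaml use different names).
CLUSTER=$(kubectl -n rook-ceph get cephcluster -o jsonpath='{.items[0].metadata.name}')

# Requests only, no limits, for MONs and MGRs.
kubectl -n rook-ceph patch cephcluster "$CLUSTER" --type merge -p '
spec:
  resources:
    mon:
      requests:
        cpu: "4"
        memory: 4Gi
    mgr:
      requests:
        cpu: "2"
        memory: 2Gi
'
```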

Resource Requests - OSD CPU

Recommendations and calculations for OSD CPU are straightforward.

Hardware recommendations:

- 1 x 2GHz CPU Thread per spinner

- 2 x 2GHz CPU Thread per SSD

- 4 x 2GHz CPU Thread per NVMe

Examples:

- 8 HDDs journaled to SSD – 10 cores / 8 OSDs = 1.25 cores per OSD

- 6 SSDs without journals – 12 cores / 6 OSDs = 2 cores per OSD

- 8 SSDs journaled to NVMe – 20 cores / 8 OSDs = 2.5 cores per OSD

Note that resources are applied cluster-wide to all OSDs. If a cluster contains multiple OSD types, you must use the highest Requests for the whole cluster. For the examples above, a mixture of HDDs journaled to SSD and SSDs without journals would necessitate a Request for 2 cores.
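
The per-OSD figures and the cluster-wide rule are simple to compute. An illustrative sketch with hypothetical helper names, using awk for the fractional division:

```bash
#!/usr/bin/env bash
# Per-OSD CPU request from a node-level core budget, plus the cluster-wide maximum.

# Cores per OSD = cores budgeted for OSDs on the node / number of OSDs on the node.
cores_per_osd() {
  awk -v cores="$1" -v osds="$2" 'BEGIN { printf "%.2f\n", cores / osds }'
}

# Rook applies one OSD request to all OSDs, so take the largest per-OSD value.
cluster_osd_cpu_request() {
  printf '%s\n' "$@" |
    awk '{ v = $1 + 0; if (NR == 1 || v > max) max = v } END { printf "%.2f\n", max }'
}

hdd_with_ssd_journal=$(cores_per_osd 10 8)   # 1.25
ssd_no_journal=$(cores_per_osd 12 6)         # 2.00
ssd_with_nvme_journal=$(cores_per_osd 20 8)  # 2.50

echo "HDD+SSD journal: $hdd_with_ssd_journal, SSD only: $ssd_no_journal, SSD+NVMe: $ssd_with_nvme_journal"
# Mixed cluster of HDDs journaled to SSD and SSDs without journals:
echo "cluster-wide OSD CPU request: $(cluster_osd_cpu_request "$hdd_with_ssd_journal" "$ssd_no_journal")"
```

The last line prints 2.00 for the mixed example, matching the 2-core request described above.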

Resource Requests - OSD RAM

There are node hardware recommendations for OSD RAM usage, and this needs to be translated to RAM requests on a per-OSD basis. The node-level recommendation below describes osd_memory_target. This is a Ceph configuration that is described in detail further on.

- Total RAM required = [number of OSDs] x (1 GB + osd_memory_target) + 16 GB

Ceph OSDs will attempt to keep heap memory usage under a designated target size set via the osd_memory_target configuration option. Ceph’s default osd_memory_target is 4GB, and we do not recommend decreasing the osd_memory_target below 4GB. You may wish to increase this value to improve overall Ceph read performance by allowing the OSDs to use more RAM. While the total amount of heap memory mapped by the process should stay close to this target, there is no guarantee that the kernel will actually reclaim memory that has been unmapped.

For example, for a node hosting 8 OSDs, the memory Requests would be calculated as follows:

- 8 OSDs x (1GB + 4GB) + 16GB = 56GB per node

Allowing resource usage for each OSD:

- 56GB / 8 OSDs = 7GB
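
The node-level formula and the per-OSD split can be sketched the same way. The helper names are hypothetical; the default of 4 GB reflects Ceph's default osd_memory_target, and the per-OSD value is rounded up so the sum of the requests never falls below the node total.

```bash
#!/usr/bin/env bash
# Node RAM and per-OSD memory request, in GB, from:
#   total = OSDs x (1 GB + osd_memory_target) + 16 GB

node_ram_gb() {
  local osds=$1 target_gb=${2:-4}   # Ceph's default osd_memory_target is 4 GB
  echo $(( osds * (1 + target_gb) + 16 ))
}

# Per-OSD request: node total divided by the OSD count, rounded up.
osd_memory_request_gb() {
  local osds=$1 target_gb=${2:-4}
  local total
  total=$(node_ram_gb "$osds" "$target_gb")
  echo $(( (total + osds - 1) / osds ))
}

echo "8 OSDs: node needs $(node_ram_gb 8) GB, request $(osd_memory_request_gb 8) GB per OSD"
```

For 8 OSDs this prints 56 GB per node and 7 GB per OSD, matching the example; with 6 OSDs the per-OSD request rounds up from about 7.7 GB to 8 GB.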

Ceph has a feature that allows it to set osd_memory_target automatically when a rook OSD Resource Request is set. However, Ceph sets this value 1:1 and does not leave overhead for waiting for the kernel to free memory. Therefore, we recommend setting osd_memory_target in Ceph explicitly, even if you wish to use the default value. Set rook’s OSD resource requests accordingly and to a higher value than osd_memory_target by at least an additional 1GB. This is so Kubernetes does not schedule more applications or Ceph daemons onto a node than the node is likely to have RAM available for.
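
A sketch of both steps, assuming the Rook-Ceph kubectl plugin is installed and using the numbers from the 8-OSD example. The first command pins osd_memory_target (4 GiB, written in bytes) in Ceph's central configuration; the patch then sets the OSD requests in the CephCluster `resources.osd` section to 7Gi, above target + 1 GB. The CPU value of 2 comes from the OSD CPU example; adjust both to your hardware, and expect the operator to restart the OSD pods when the requests change.

```bash
# Pin osd_memory_target explicitly, even at the 4 GiB default (value in bytes).
kubectl rook-ceph ceph config set osd osd_memory_target 4294967296
kubectl rook-ceph ceph config get osd osd_memory_target

# OSD pod requests: memory at least 1 GB above the target (7Gi from the
# 8-OSD example) and CPU from the OSD CPU example. Requests only, no limits.
CLUSTER=$(kubectl -n rook-ceph get cephcluster -o jsonpath='{.items[0].metadata.name}')
kubectl -n rook-ceph patch cephcluster "$CLUSTER" --type merge -p '
spec:
  resources:
    osd:
      requests:
        cpu: "2"
        memory: 7Gi
'
```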

OSD RAM Resource Requests come with the same cluster-wide Resource Requests note as for OSD CPU. Use the highest Requests for a cluster consisting of multiple different configurations of OSDs.

Resource Requests - Gateways

For gateways, the best recommendation is to always tune your workload and daemon configurations. However, we do recommend the following initial configurations:

RGWs:

- 6-8 CPU cores

- 64 GB RAM (32 GB minimum; may only apply to the older "civetweb" frontend)

Note: The numbers above for RGW assume many clients connecting, so they might not suit your scenario. RAM usage should be lower with the newer "beast" frontend than with the older "civetweb" frontend.

MDS:

- 2.5 GHz CPU with at least 2 cores

- 3GB RAM

NFS-Ganesha:

- 6-8 CPU cores (untested, high estimate)

- 4GB RAM for default settings (settings hardcoded in rook presently)
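
Gateway requests are set on their own custom resources rather than in the CephCluster: `spec.metadataServer.resources` on a CephFilesystem and `spec.gateway.resources` on a CephObjectStore. A sketch using the starting points above; `myfs` and `my-store` are the names from Rook's example manifests and are assumptions here, so substitute your own.

```bash
# MDS: 2 cores and 3 GB RAM as a starting request (hypothetical filesystem name "myfs").
kubectl -n rook-ceph patch cephfilesystem myfs --type merge -p '
spec:
  metadataServer:
    resources:
      requests:
        cpu: "2"
        memory: 3Gi
'

# RGW: 6 cores and the 32 GB minimum (hypothetical object store name "my-store").
kubectl -n rook-ceph patch cephobjectstore my-store --type merge -p '
spec:
  gateway:
    resources:
      requests:
        cpu: "6"
        memory: 32Gi
'
```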

Network Considerations

Network latency and bandwidth are often the primary bottlenecks in Ceph performance:

- Dedicated network: Consider using a dedicated network for OSD replication traffic to avoid interference from other traffic. Enable host networking (network.provider: host in the CephCluster spec) and define the subnets to use for the public and cluster (private) networks (network.addressRanges).

- Jumbo frames: On bare metal, enable jumbo frames (MTU 9000) on your network switches and NICs to reduce overhead for large data transfers.
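
Jumbo frames only help if every hop on the path uses the same MTU. One common way to verify this on Linux is a ping with the Don't Fragment bit set and the largest payload that fits in one packet: the MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header. A sketch with hypothetical helper names, assuming IPv4 and the iputils `ping`:

```bash
#!/usr/bin/env bash
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet:
# MTU - 20 bytes (IPv4 header, no options) - 8 bytes (ICMP header).
icmp_payload_for_mtu() {
  local mtu=${1:-9000}
  echo $(( mtu - 20 - 8 ))
}

# Ping a peer with fragmentation prohibited (-M do). If any hop has a smaller
# MTU, the pings fail instead of being silently fragmented.
check_jumbo_path() {
  local peer=$1 mtu=${2:-9000}
  ping -M do -c 3 -s "$(icmp_payload_for_mtu "$mtu")" "$peer"
}
```

For example, `check_jumbo_path 10.0.1.12` (a hypothetical peer address on the storage network) sends 8972-byte payloads; if a switch or NIC along the way is still at MTU 1500, the pings fail.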

Ceph placement group(pg)

Make sure the pg_autoscaler module is enabled. Nautilus (v14) introduced the PG autoscaler mgr module, which can automatically manage the PG and PGP counts of pools. In Octopus (v15.2.x) and newer, the pg_autoscaler module is enabled by default.

You can verify its status by running the following command (note: the Rook-Ceph kubectl plugin must be installed):

```bash
kubectl rook-ceph ceph osd pool autoscale-status
```

Please see the Ceph blog post "New in Nautilus: PG merging and autotuning" for more information about this module.
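
If a pool shows autoscaling as off, it can be switched on per pool through the plugin. A sketch; `replicapool` is the pool created by Rook's example RBD StorageClass manifests and is an assumption here.

```bash
# Turn on PG autoscaling for one pool (substitute your own pool name).
kubectl rook-ceph ceph osd pool set replicapool pg_autoscale_mode on

# Confirm the mode and the PG targets the autoscaler has chosen.
kubectl rook-ceph ceph osd pool autoscale-status
```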

Log levels

Raise the Rook log level to DEBUG for the initial deployment and for upgrades, as it helps with debugging problems that are more likely to occur at those times.

When you deploy the Rook operator using the Helm chart, set the log level with the chart value logLevel=DEBUG.

If you deploy the operator from manifest files, set ROOK_LOG_LEVEL: "DEBUG" in the rook-ceph-operator-config ConfigMap.
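
A sketch of both approaches. The ConfigMap and operator Deployment names are those used by Rook's example manifests; the Helm release name `rook-ceph` and chart `rook-release/rook-ceph` are assumptions about how the chart was installed. Set the level back to INFO once the deployment or upgrade is done.

```bash
# Manifest-based install: set the level in the operator ConfigMap.
kubectl -n rook-ceph patch configmap rook-ceph-operator-config --type merge \
  -p '{"data":{"ROOK_LOG_LEVEL":"DEBUG"}}'

# Helm-based install: keep existing values and change only the log level.
helm upgrade rook-ceph rook-release/rook-ceph -n rook-ceph --reuse-values --set logLevel=DEBUG

# If DEBUG lines do not appear, restart the operator, then follow its log.
kubectl -n rook-ceph rollout restart deploy/rook-ceph-operator
kubectl -n rook-ceph logs deploy/rook-ceph-operator -f
```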

How to debug Rook

Benchmarking

You can use the Flexible I/O Tester (fio) to measure I/O performance and latency. See also the blog post by Travis Nielsen, which uses Amazon EBS volumes to measure Rook-Ceph performance.
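
A minimal fio sketch for a Ceph-backed volume, assuming fio is installed wherever the volume is mounted (the nginx image from the demo does not include it) and that `/mnt/ceph-test` is a hypothetical mount point of an RBD or CephFS volume. Direct I/O (`--direct=1`) bypasses the page cache, so the results reflect Ceph rather than local memory.

```bash
# Hypothetical test file on a Rook-Ceph-backed volume.
TEST_FILE=/mnt/ceph-test/fio.dat

# 4 KiB random writes, queue depth 32, 60 s: reports IOPS and latency percentiles.
fio --name=randwrite-4k --filename="$TEST_FILE" --size=4G \
    --rw=randwrite --bs=4k --ioengine=libaio --iodepth=32 --direct=1 \
    --runtime=60 --time_based --group_reporting

# 1 MiB sequential reads: a throughput-oriented counterpart.
fio --name=seqread-1m --filename="$TEST_FILE" --size=4G \
    --rw=read --bs=1M --ioengine=libaio --iodepth=16 --direct=1 \
    --runtime=60 --time_based --group_reporting
```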

Performance profiling

There are cases where debug logs are not sufficient to investigate issues such as high CPU utilization of a Ceph process. In that situation, it is useful to collect a coredump and perf information from the Ceph process, which can then be shared with the Ceph team in an issue. Capturing backtraces at different times helps identify stalls, deadlocks, or performance bottlenecks. The official Rook documentation describes how to collect perf data of a Ceph process at runtime.

Kubectl plugin (Rook-Ceph)

The Rook-Ceph kubectl plugin is a tool to help troubleshoot your Rook-Ceph cluster.

Here are a few of the operations that the plugin will assist with:

- Health of the Rook pods

- Health of the Ceph cluster

- Create "debug" pods for mons and OSDs that are in need of special Ceph maintenance operations

- Restart the operator

- Purge an OSD

- Run any ceph command
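
A few representative invocations, assuming krew is available to install the plugin; run `kubectl rook-ceph --help` to confirm the subcommands in your plugin version. The OSD deployment name `rook-ceph-osd-0` is an example.

```bash
# Install the plugin with krew.
kubectl krew install rook-ceph

# Health of the Rook pods and the Ceph cluster.
kubectl rook-ceph health

# Run any ceph command, for example the cluster status.
kubectl rook-ceph ceph status

# Restart the operator.
kubectl rook-ceph operator restart

# Start, then stop, a debug pod for an OSD that needs maintenance.
kubectl rook-ceph debug start rook-ceph-osd-0
kubectl rook-ceph debug stop rook-ceph-osd-0
```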

Conclusion

Rook-Ceph provides a powerful, flexible storage solution for Kubernetes environments, but proper tuning is essential to achieve optimal performance and reliability. By following the best practices outlined in this guide, from storage media selection and resource requests to network configuration, PG autoscaling, and debugging, you can build a robust, high-performance Ceph storage cluster that meets your application requirements. This is particularly useful if you are running database or AI workloads on Kubernetes.

Remember that storage tuning is an ongoing process. Regularly monitor your cluster's performance, review logs, and adjust parameters as your workload evolves. The time invested in proper configuration and maintenance will pay dividends in system reliability and performance.

Need expert guidance on optimizing storage for Kubernetes? Talk to us for a free consultation and get tailored solutions for your infrastructure.